Visions

Panmnesia's CXL-Enabled AI Accelerator (CES 2024 Innovation Award Winner)

[CXL Forum '23] Panmnesia - A SW-HW Framework for AI-Driven ANNS Utilizing CXL Memory Pool

Demo at SC23: HPC Applications on Panmnesia's CXL 3.0 All-in-One Framework

Cache in Hand: Expander-Driven CXL Prefetcher for Next Generation CXL-SSD

Experience Reality: Panmnesia’s Real-World, Multi-Terabyte CXL Memory Disaggregation Live at FMS 23

TrainingCXL: Failure Tolerant Training with Persistent Memory Disaggregation over CXL

CXL 2.0 End-to-End System

CXL Forum at FMS'22: CXL-SSD

CXL Forum at FMS'22: CXL 2.0 End-to-End Solution

Technologies

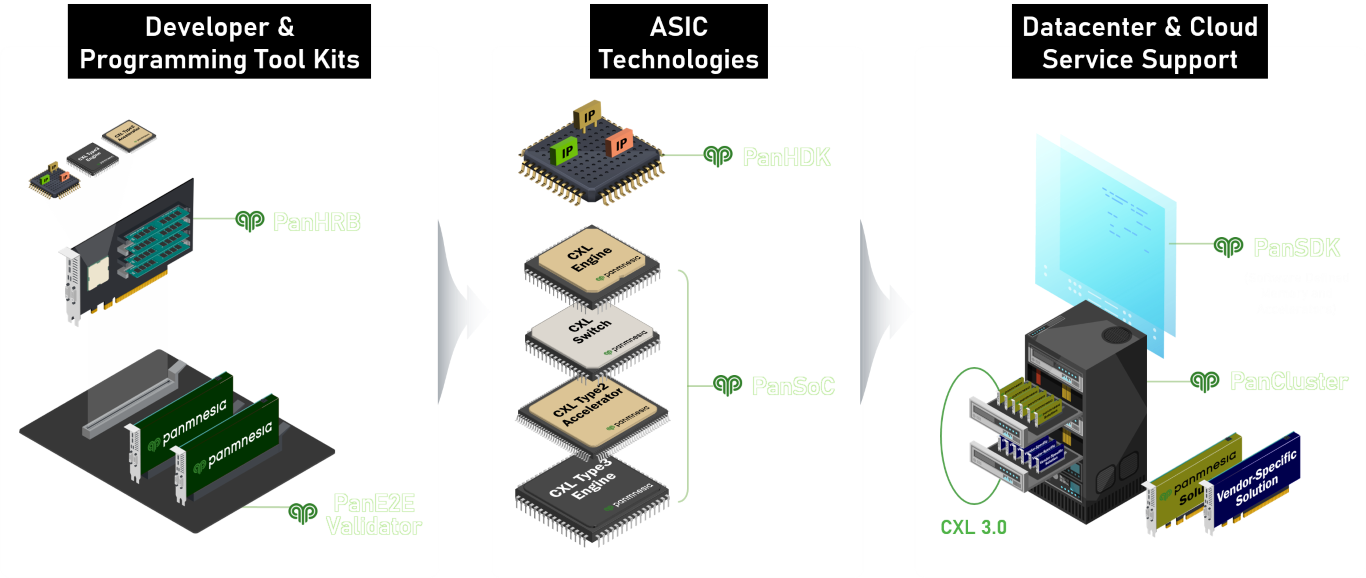

The Panmnesia Hardware Development Toolkit (PanHDK) and Hardware Reference Board (PanHRB) are reliable, system-validated development vehicles that speed up your CXL development. Together, PanHDK and PanHRB provide an environment for integrating Panmnesia’s state-of-the-art CXL logic into your hardware, such as CPUs, co-processors, domain-specific/ML accelerators, and memory controllers, at the register-transfer level (RTL). Designs developed with PanHDK and PanHRB are compatible with all types of CXL components and devices. Fabless and silicon companies can build their own CXL development environment in collaboration with Panmnesia.

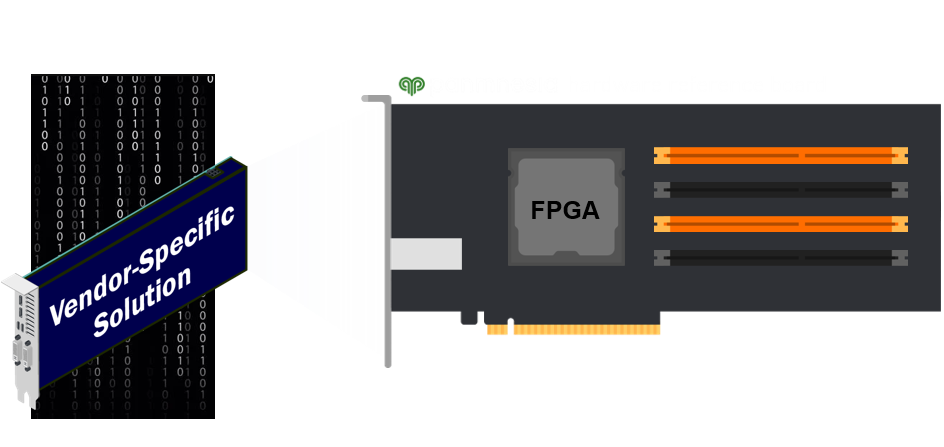

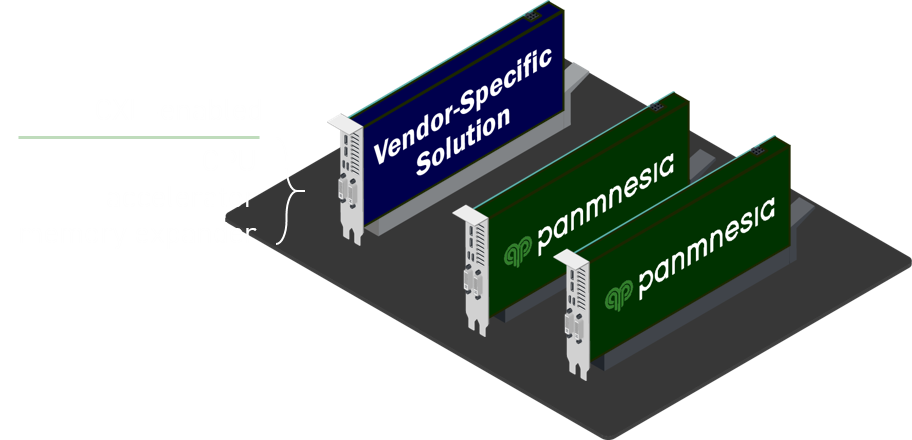

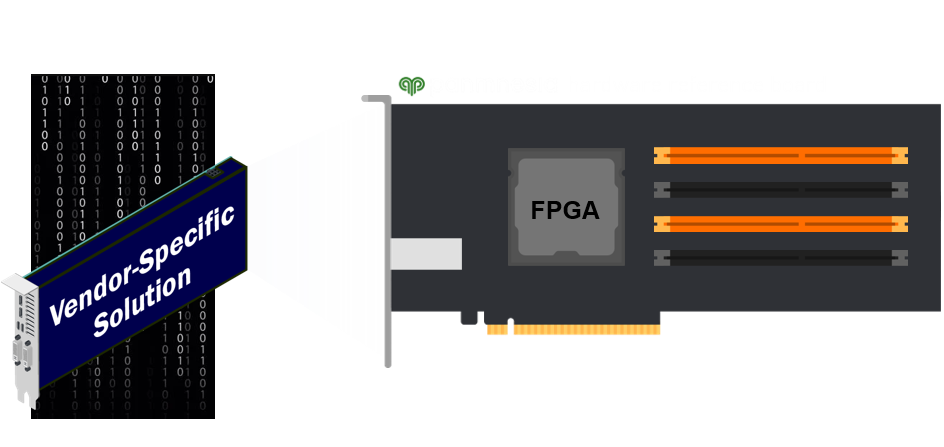

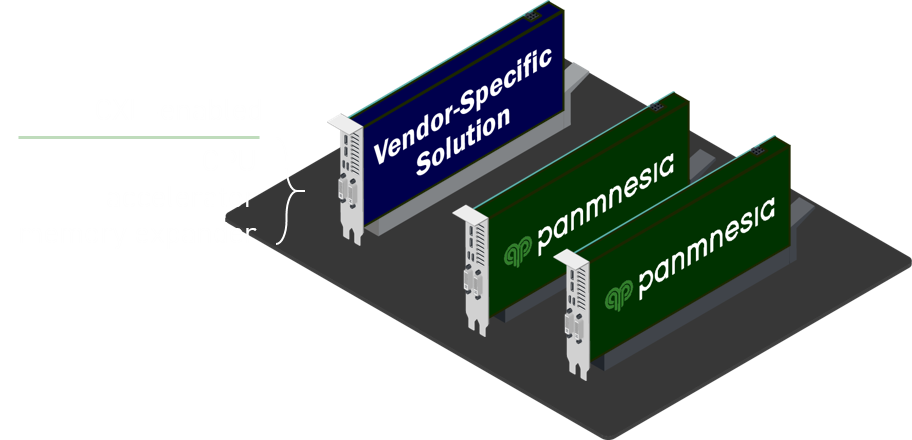

The Panmnesia End-to-End (PanE2E) validator helps you quickly and efficiently verify, validate, and integrate your vendor-specific solutions into a CXL system. Even if you do not have every hardware component of the system, PanE2E lets you verify and validate all the functionalities and features your device must support. For example, a hardware company may have CXL memory expanders but be unable to validate them without support from CPU and switch vendors (and vice versa). PanE2E gives you the upper hand, turning your CXL devices and prototypes into verified and validated systems without vendor lock-in.

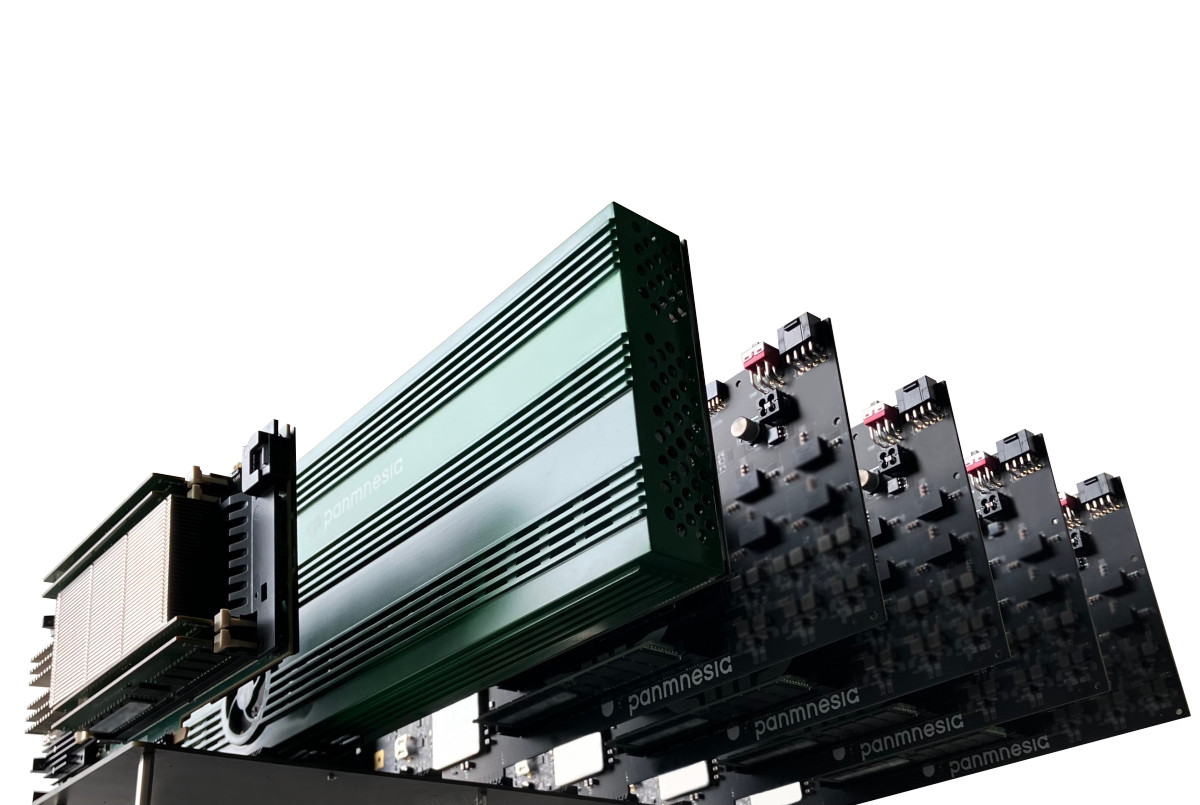

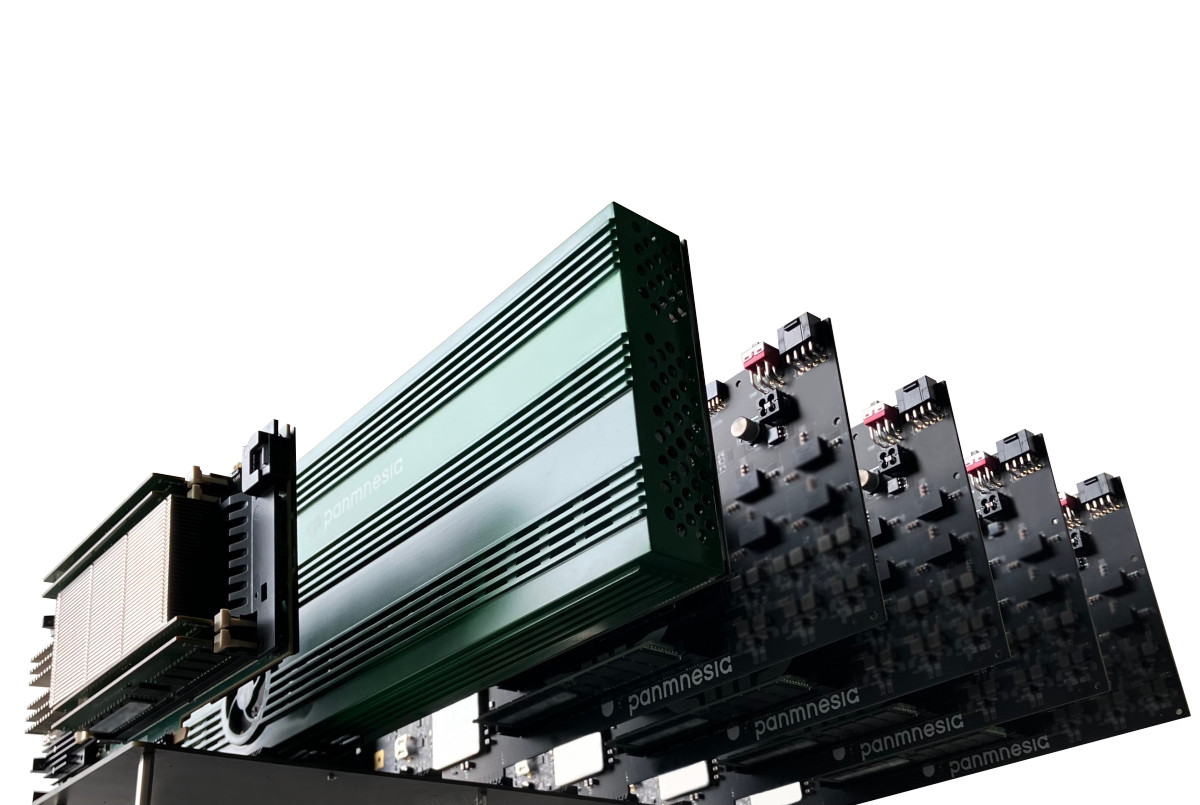

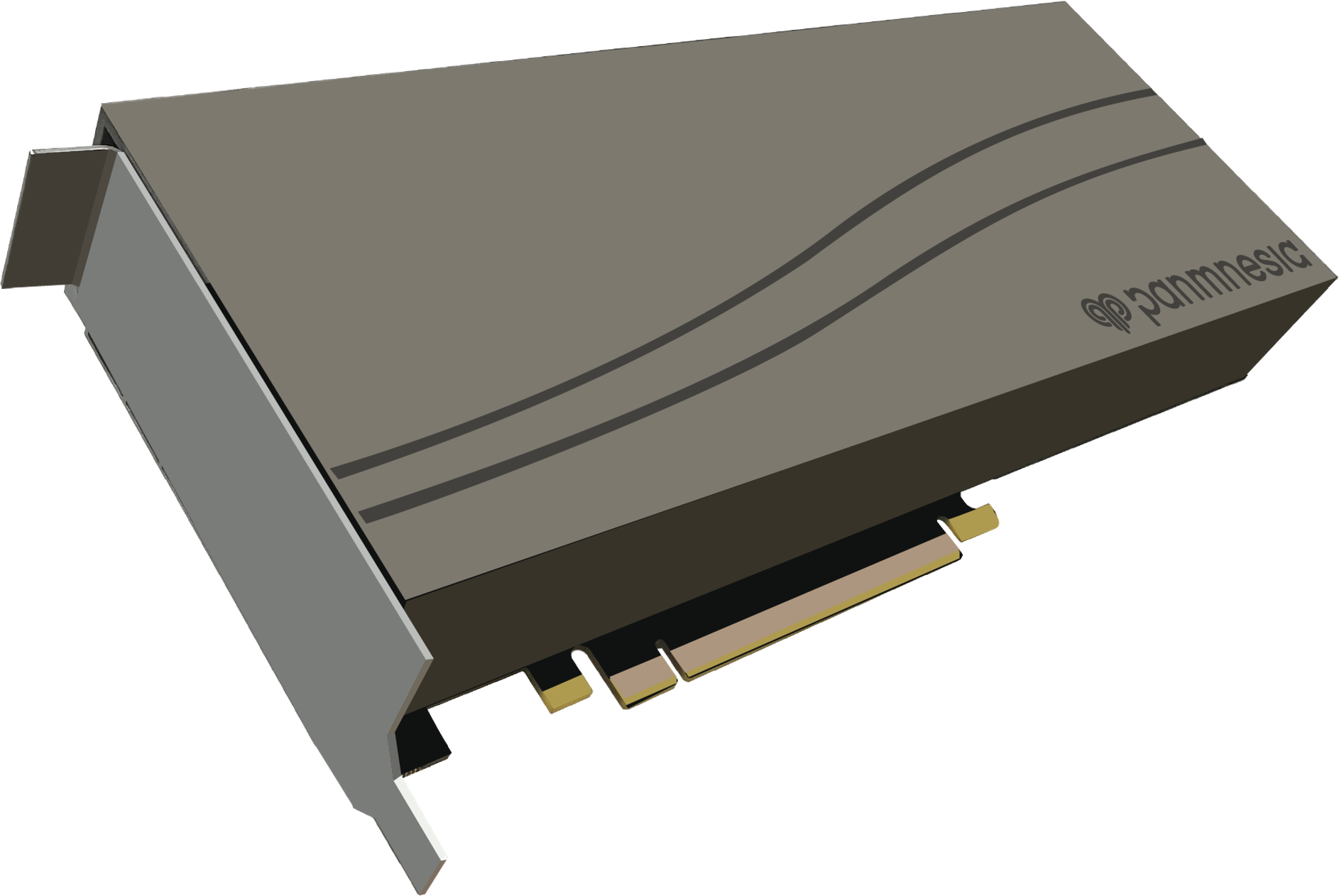

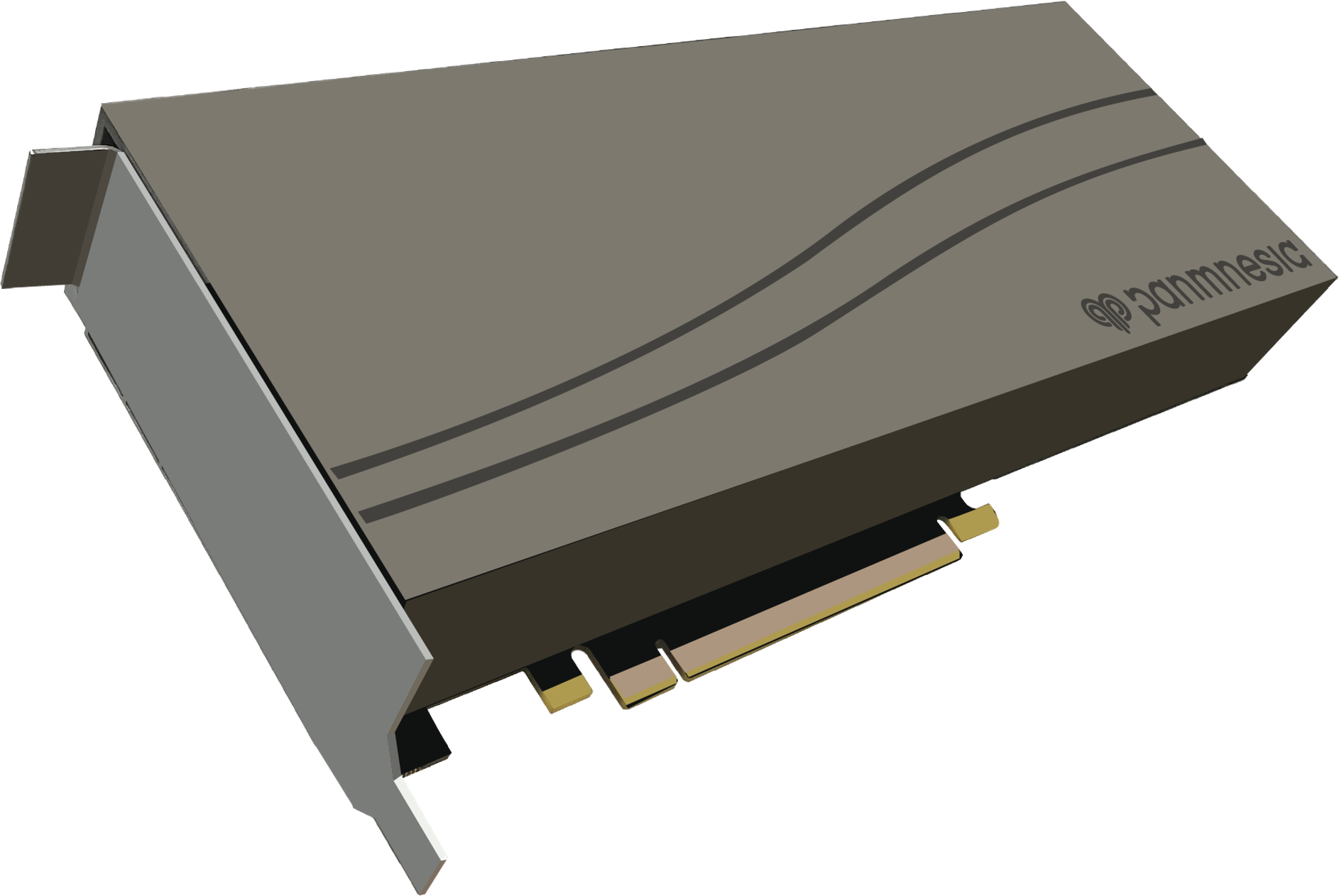

The Panmnesia CXL-enabled AI accelerator (PanAccelerator) offers hardware acceleration for large-scale AI services. PanAccelerator distinguishes itself from conventional AI accelerators through the composability of its memory resources using CXL. Panmnesia's cache-coherent interconnect controller technology disaggregates the accelerator's compute and memory resources, enabling fast, low-cost, large-scale AI services. The accelerator also features a powerful compute unit optimized for parallel vector/tensor processing. Lower the cost of large-scale AI deployment without compromising performance.

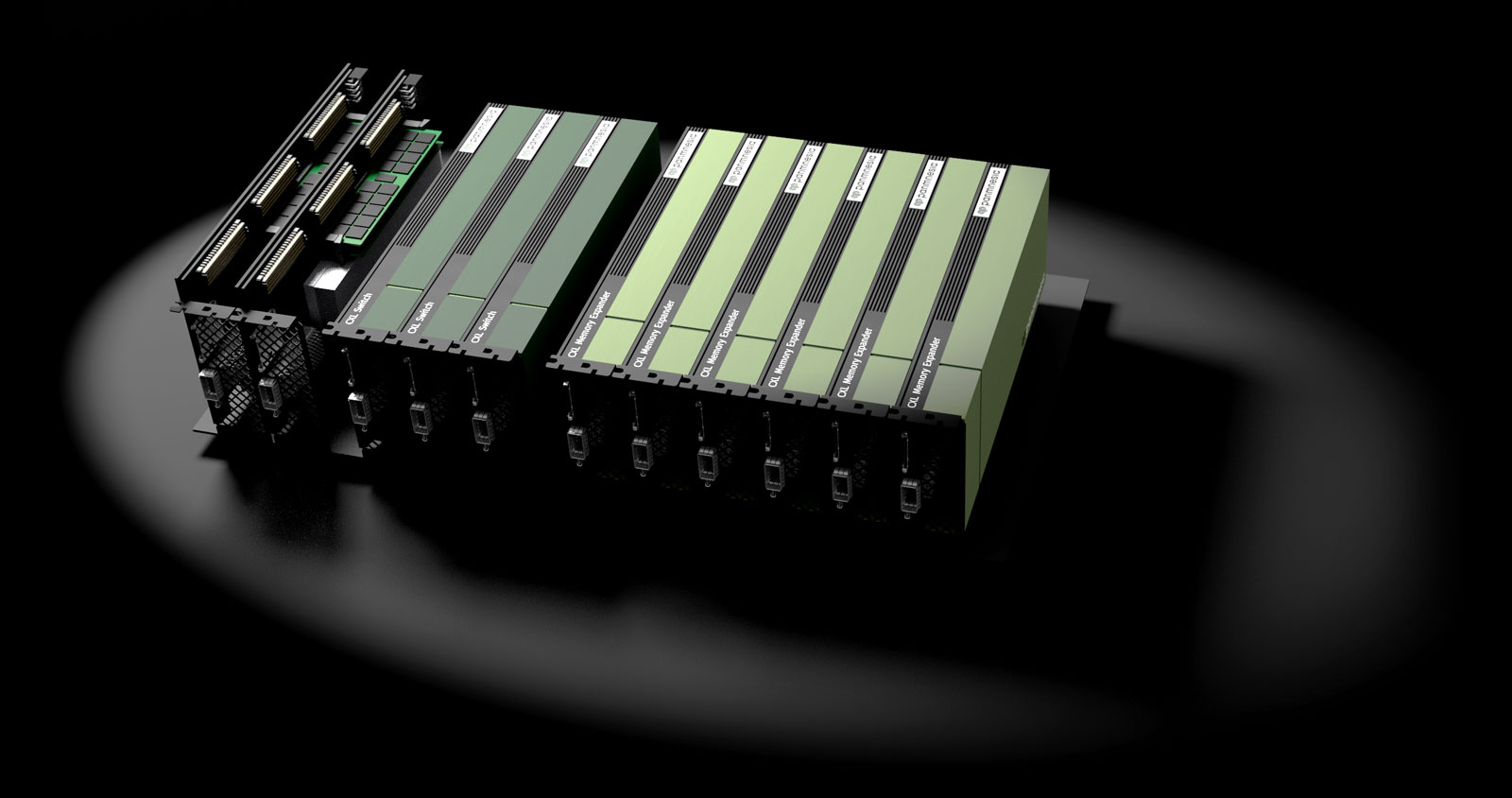

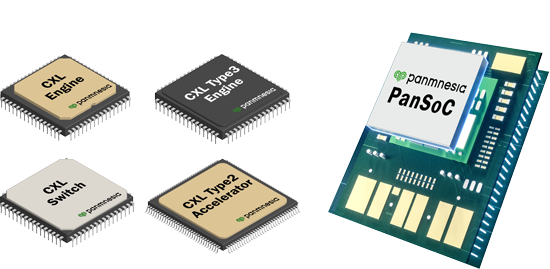

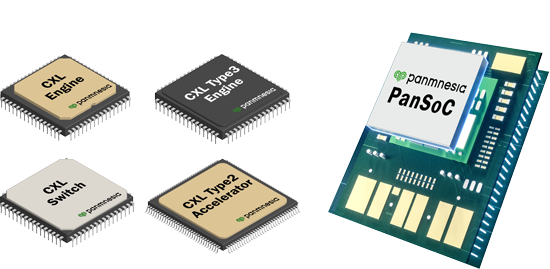

The Panmnesia System-on-Chip (PanSoC) is a holistic Compute Express Link (CXL) infrastructure for hyperscalers, covering CPU-to-device, CPU-to-memory, and device-to-memory connections. PanSoC offers highly scalable, high-performance CXL switches and interconnect networks that can even include intelligent near-data processing units (DPUs) and processing-in-memory (PIM) units. PanSoC includes hardware IP licenses for several CXL agents and engines, which allow existing devices such as processors, diverse accelerators, and memory to be easily connected to CXL. PanSoC’s agents/engines can be integrated into the following devices to enable CXL:

Solutions

Locations