Panmnesia successfully hosted ‘CXL Tech Day 2024’ on January 19th in Daejeon, South Korea, drawing around a hundred students and reporters interested in semiconductor technology.

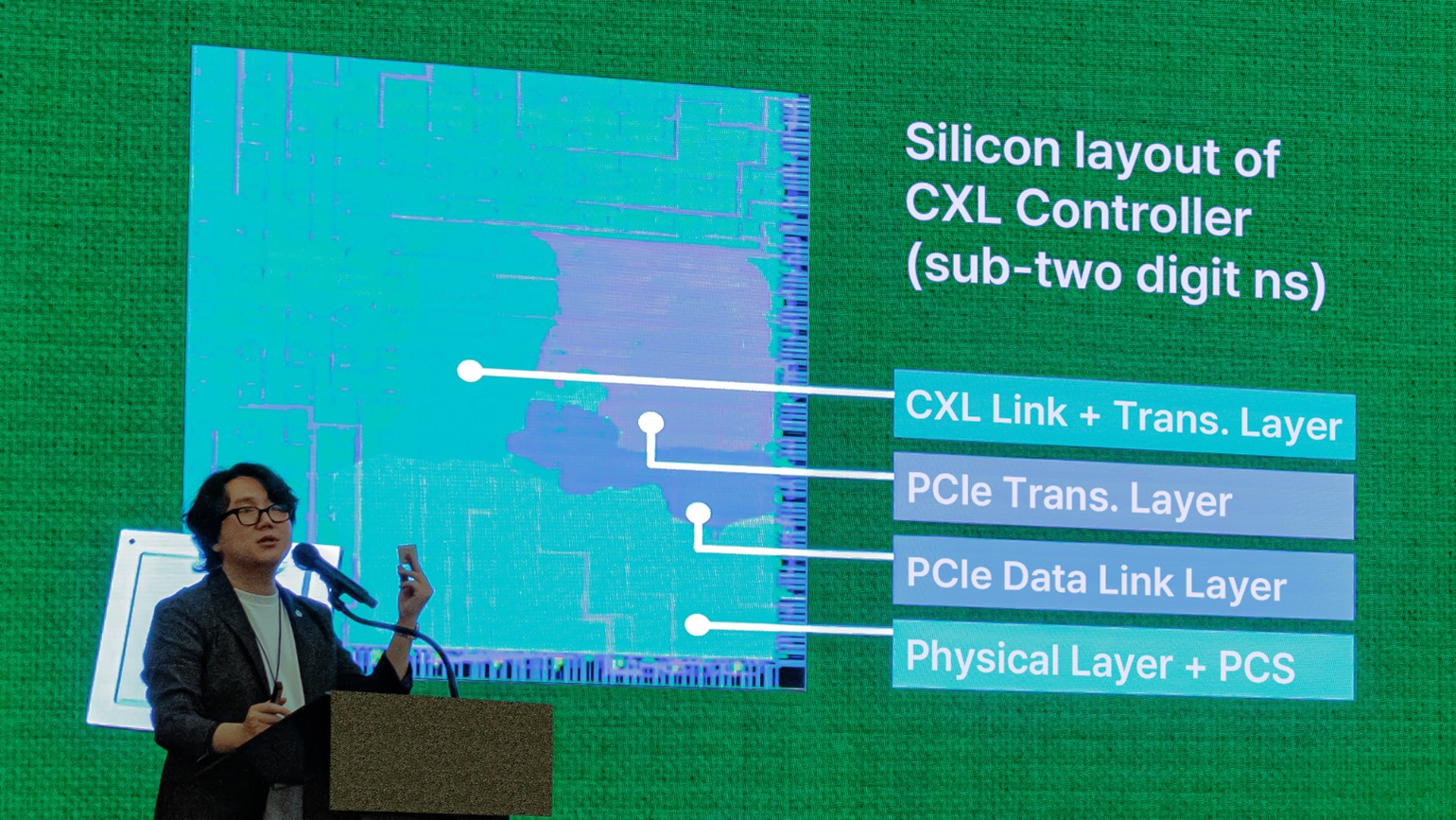

During the event, leading engineers in the field of Compute Express Link (CXL) discussed why CXL matters, examined how CXL 3.0 differs from its predecessors, and explored how CXL technology can transform datacenter architecture. The engineers also shared cutting-edge technologies and research topics related to CXL. Notable presentations included one by Miryeong Kwon, Chief Strategy Officer (CSO) of Panmnesia, who explained Panmnesia’s AI acceleration framework built on a CXL Type 2 device. Hanjin Choi, Assistant Director of Panmnesia, unveiled the company’s solution for accelerating practical supercomputing applications, which is based on a CXL 3.0/3.1 hardware framework. Lastly, Seonghyeon Jang, Assistant Director of Panmnesia, introduced Panmnesia’s cost-efficient, petabyte-scale memory expansion solution based on CXL-SSD technology.

Additionally, Myoungsoo Jung, CEO of Panmnesia, highlighted the company’s recent accomplishments and outlined plans for the new year. Notable among the reported achievements was Panmnesia’s active engagement in collaborations and discussions with over twenty global IT companies. The company plans to expand its business model and accelerate hiring, drawing on the strategic locations of its new offices in Daejeon and Seoul.

This event has garnered attention from reputable Korean publications. For those interested, additional details can be found in the articles (link below).

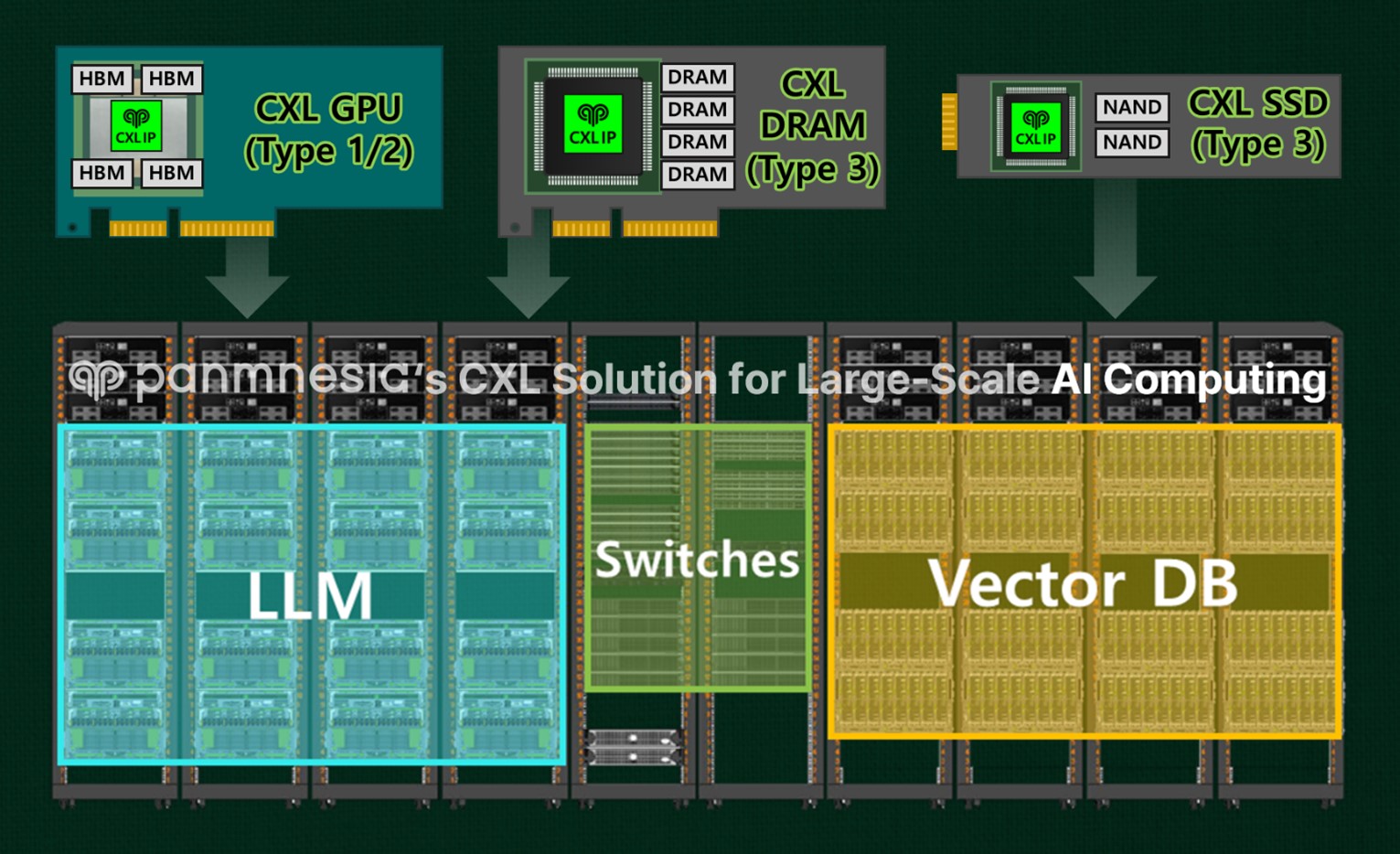

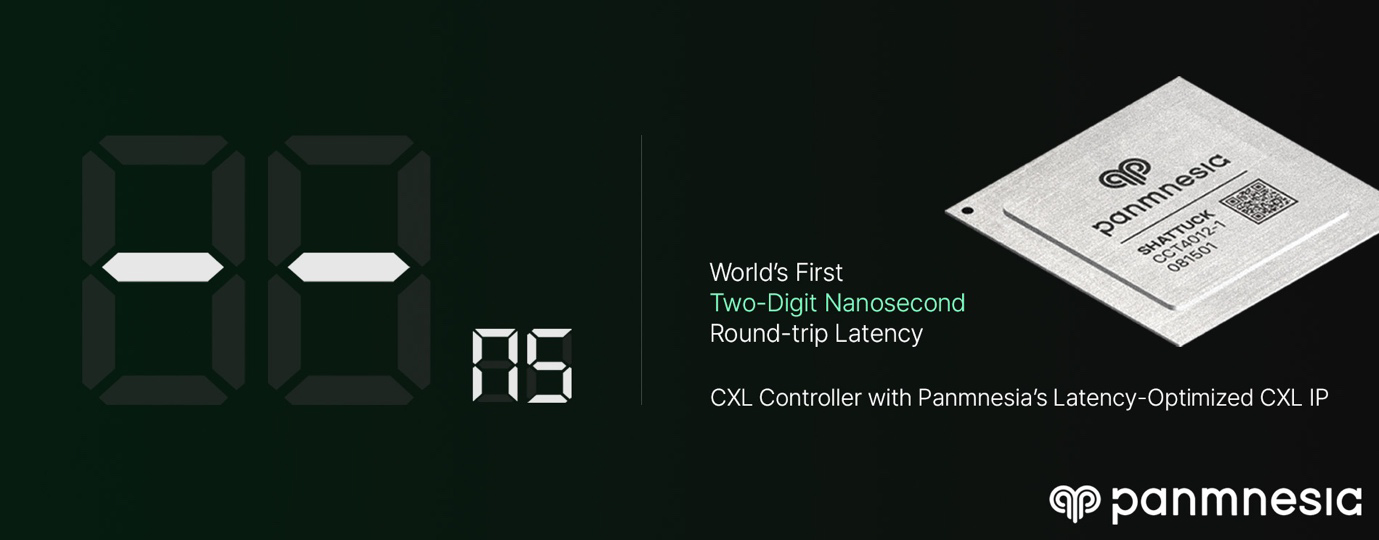

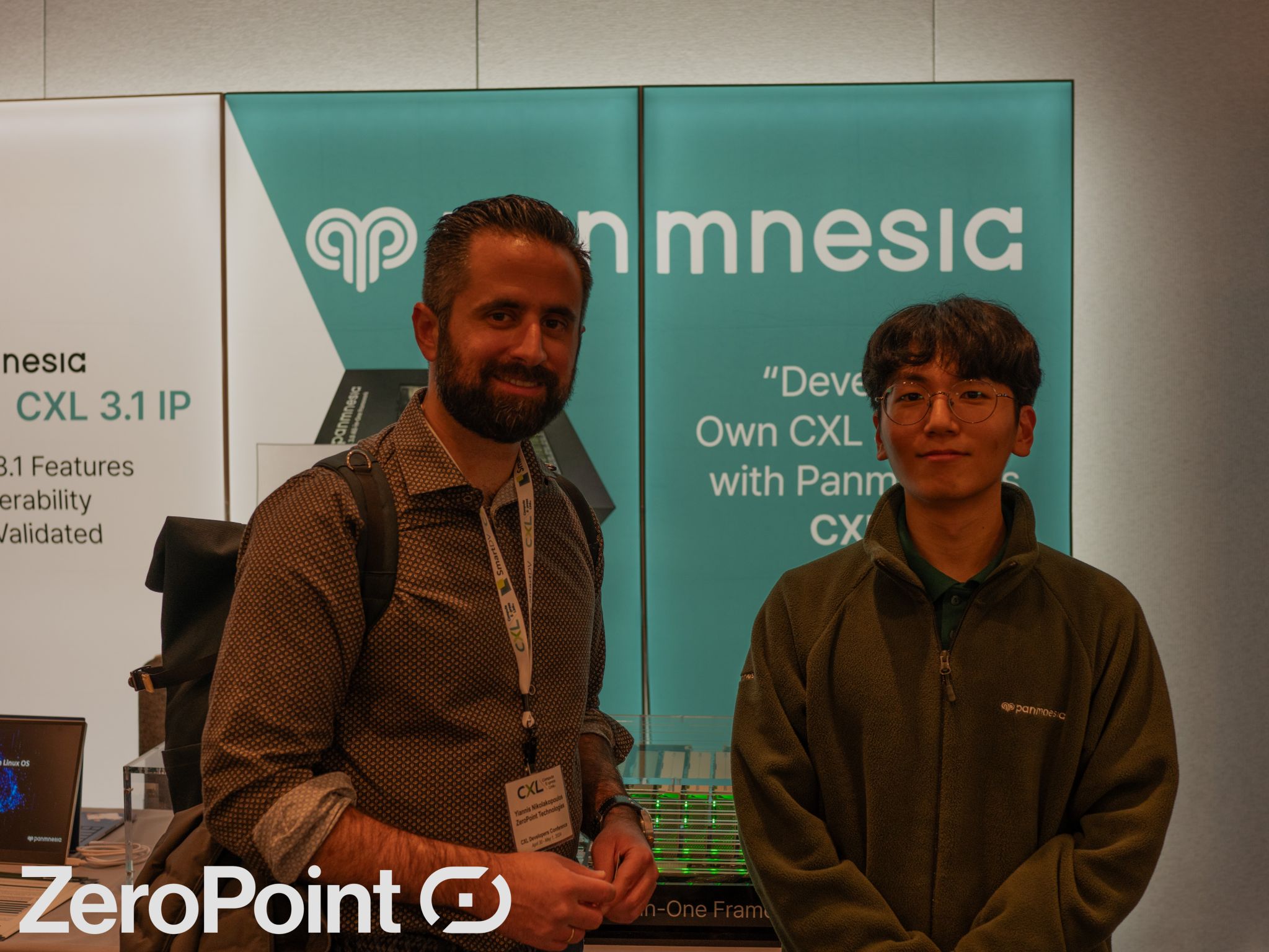

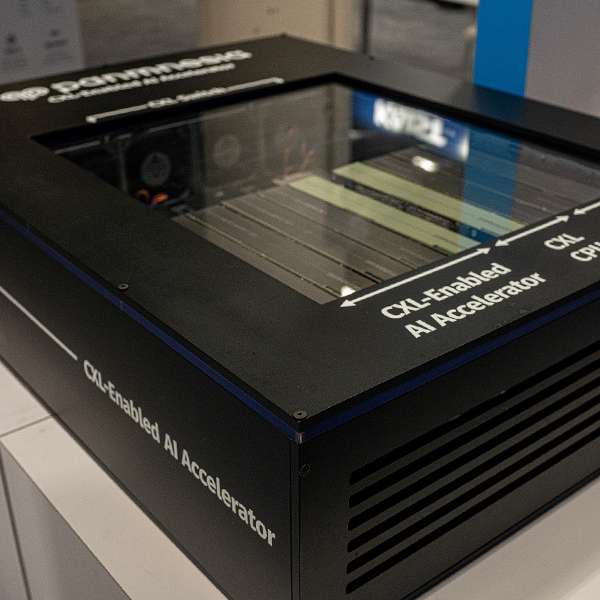

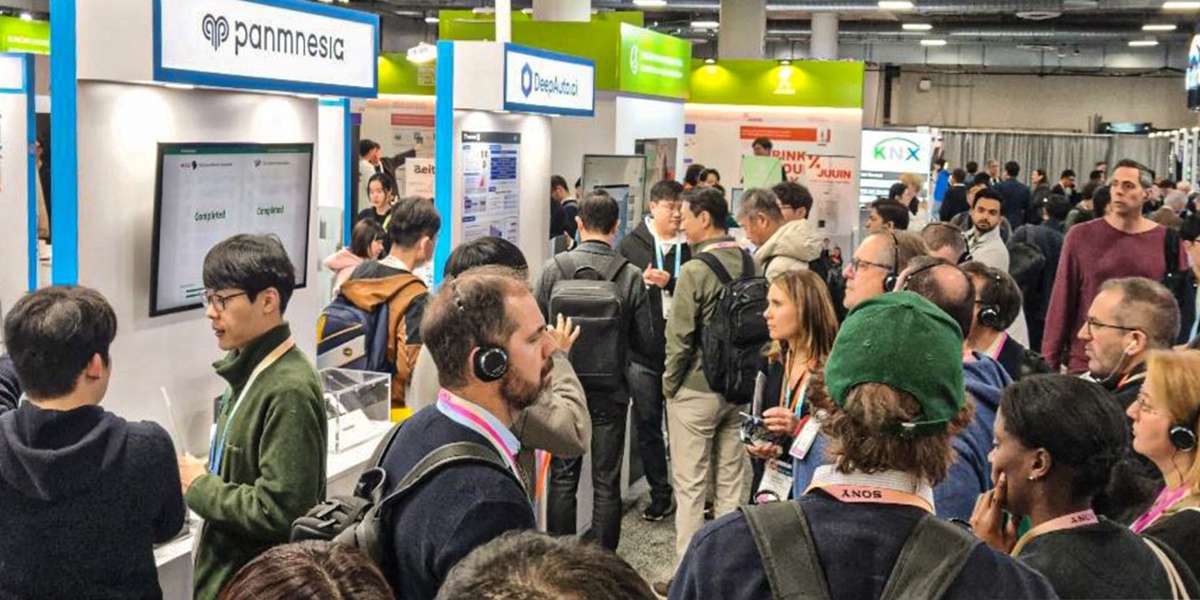

Panmnesia, a cutting-edge CXL IP startup, unveiled a demo of its CXL-enabled AI accelerator for large-scale AI at CES 2024. The accelerator received a CES 2024 Innovation Award for delivering 101x higher performance than an existing AI acceleration system.

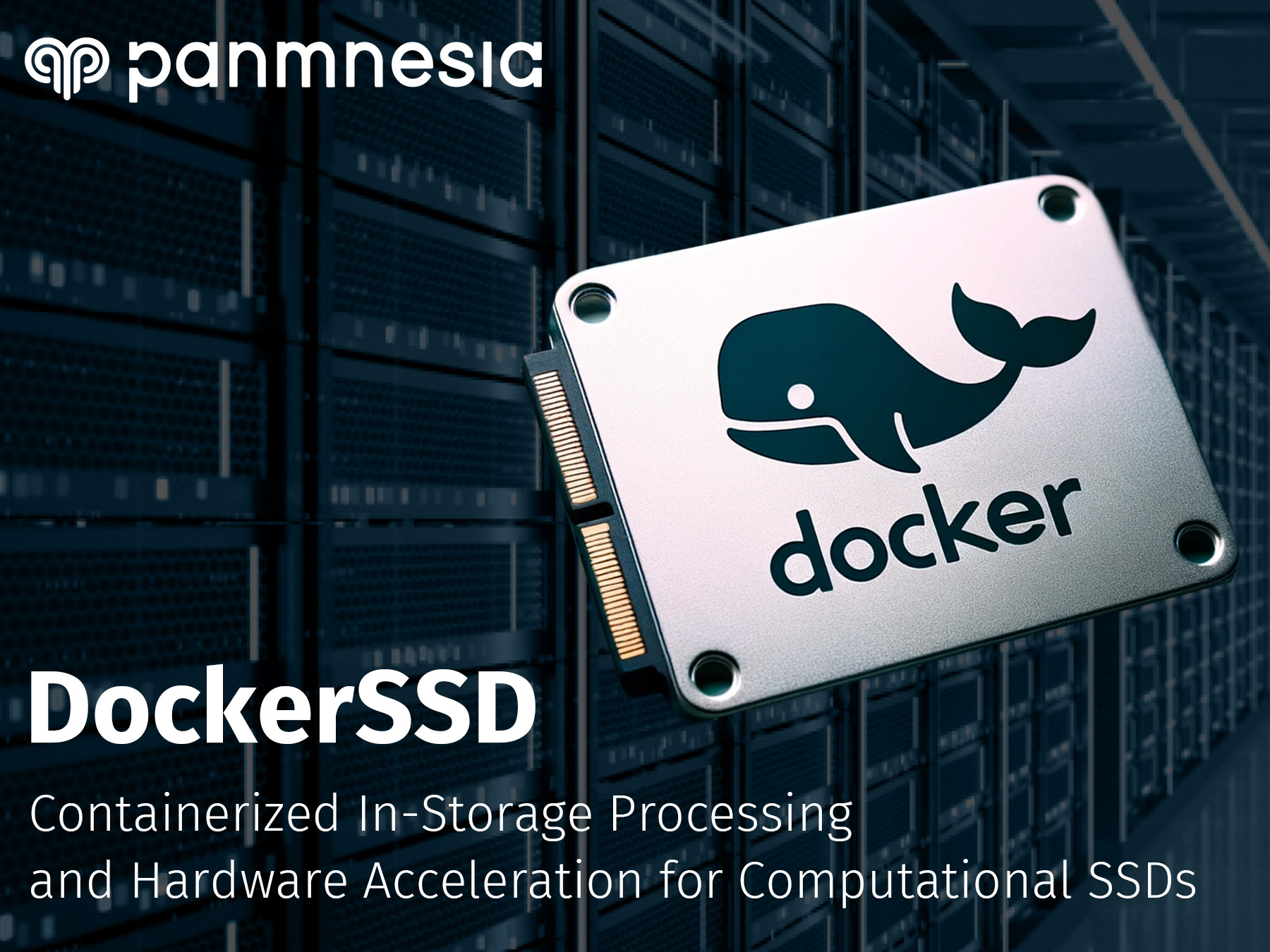

Panmnesia has developed DockerSSD, a technology that lets a storage device act as an independent server that processes data without a host computer.

DockerSSD employs Docker, widely used operating system-level virtualization software, to provide a complete environment for executing user-level applications. This complete environment makes it easy for users to leverage NDP (Near Data Processing) technology. In evaluation, DockerSSD sped up execution by 2x and cut power consumption by half.

NDP technology has been discussed for decades as a way to accelerate data processing by minimizing data movement. To capture this advantage, memory and storage vendors proposed numerous approaches. However, these approaches have not been widely deployed because of their poor usability. Vendors did not want to disclose their internal hardware and software, so each exposed its internal environment through its own interface, forcing applications to be modified to fit these interfaces.
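To make "minimizing data movement" concrete, the sketch below counts the bytes that cross the storage interface when a selective filter runs on the host versus next to the data. It is a toy model, not DockerSSD's implementation: the fixed `RECORD_BYTES` size, the `Record` type, and the filter functions are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

RECORD_BYTES = 4096  # hypothetical size of one stored record, in bytes

@dataclass
class Record:
    key: int
    value: int

Predicate = Callable[[Record], bool]

def host_side_filter(records: List[Record], pred: Predicate) -> Tuple[List[Record], int]:
    """Conventional path: every record crosses the storage interface, then the host filters."""
    moved = len(records) * RECORD_BYTES
    return [r for r in records if pred(r)], moved

def near_data_filter(records: List[Record], pred: Predicate) -> Tuple[List[Record], int]:
    """NDP path: the filter runs next to the data; only matching records go to the host."""
    matches = [r for r in records if pred(r)]
    return matches, len(matches) * RECORD_BYTES

if __name__ == "__main__":
    data = [Record(k, k * 7 % 100) for k in range(10_000)]
    selective = lambda r: r.value < 5  # keeps exactly 5% of these records
    host_result, host_bytes = host_side_filter(data, selective)
    ndp_result, ndp_bytes = near_data_filter(data, selective)
    assert host_result == ndp_result
    print(f"host-side: {host_bytes:,} bytes moved; near-data: {ndp_bytes:,} bytes moved")
```

Both paths return the same answer, but with a 5% selective filter the near-data path moves 20x fewer bytes. The more selective the computation, the larger the saving, which is the core argument for NDP.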

To improve the usability of NDP technology, Panmnesia has developed DockerSSD, a new approach to NDP that runs Docker inside the storage device. Docker is operating system-level virtualization software that provides all the environments that user applications running on the operating system need. By leveraging Docker, DockerSSD can reproduce the host’s execution environment inside the storage device. This lets users run their applications in storage without being bound by vendor-specific interfaces. Because this work removes a significant burden from users while delivering high performance and high energy efficiency, Panmnesia expects it to be widely adopted in growing data centers.

The paper will be presented in March at the ‘IEEE International Symposium on High-Performance Computer Architecture (HPCA) 2024’, held in Edinburgh, Scotland.

Panmnesia, a CXL Intellectual Property (IP) leader, announced that it has received a CES 2024 Innovation Award for its pioneering CXL-enabled AI accelerator. The recognition came ahead of CES 2024, the world’s premier technology event, set to take place in Las Vegas.

The CES Innovation Awards program is the highest form of recognition at CES and is run by the Consumer Technology Association (CTA), the event’s owner and producer. Each year, the program selects products from around the globe that represent the very best in technology, design, and innovation. Panmnesia won the award for its CXL-enabled AI accelerator, which will be exhibited at the upcoming CES 2024 event.

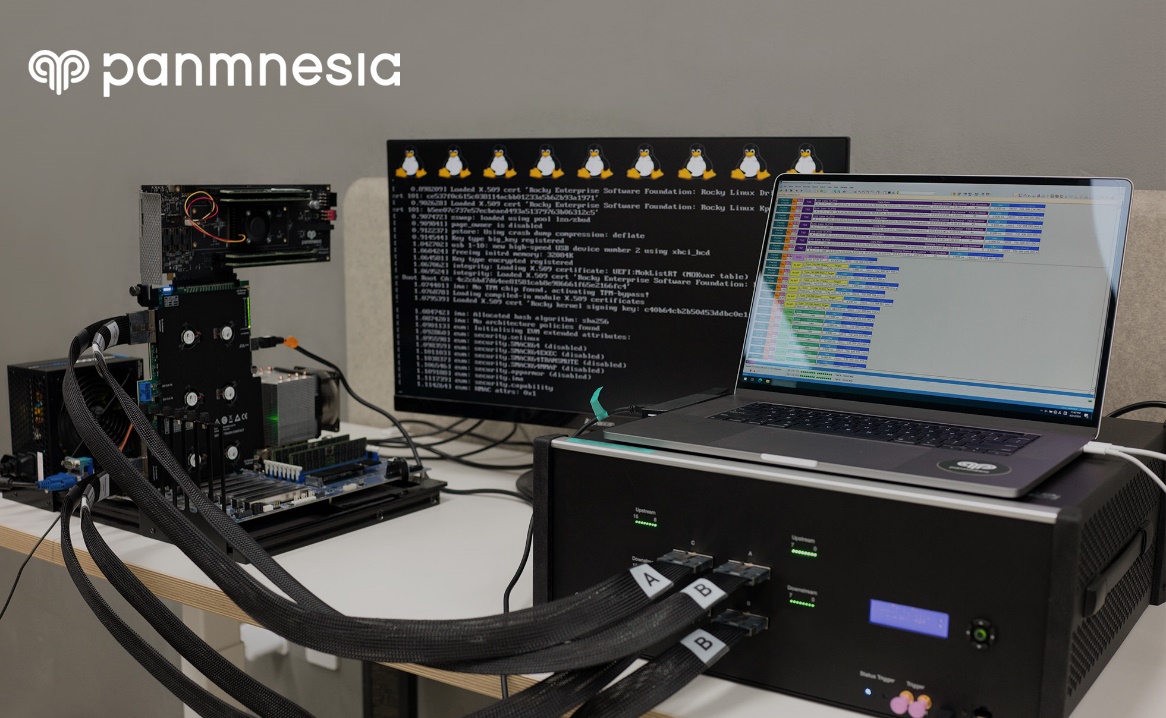

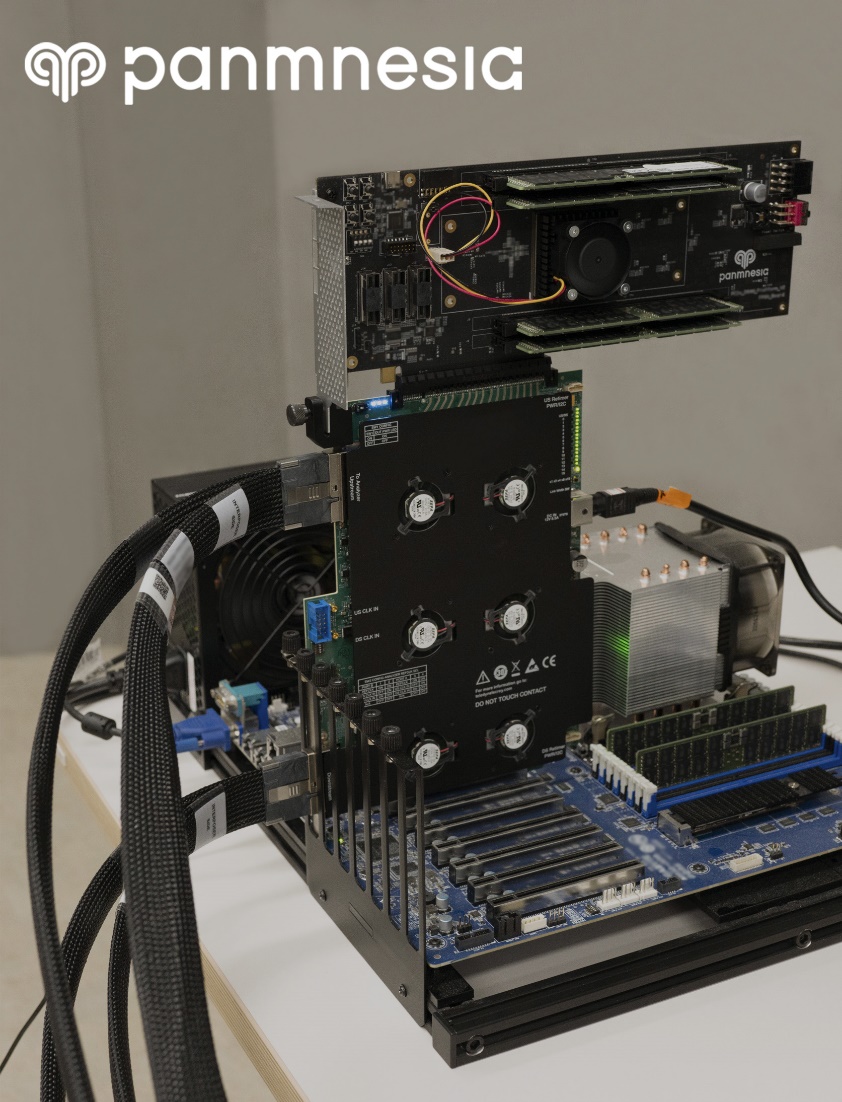

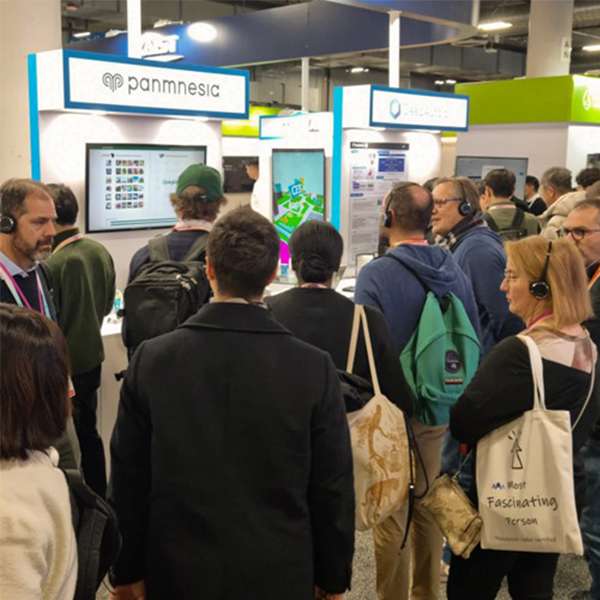

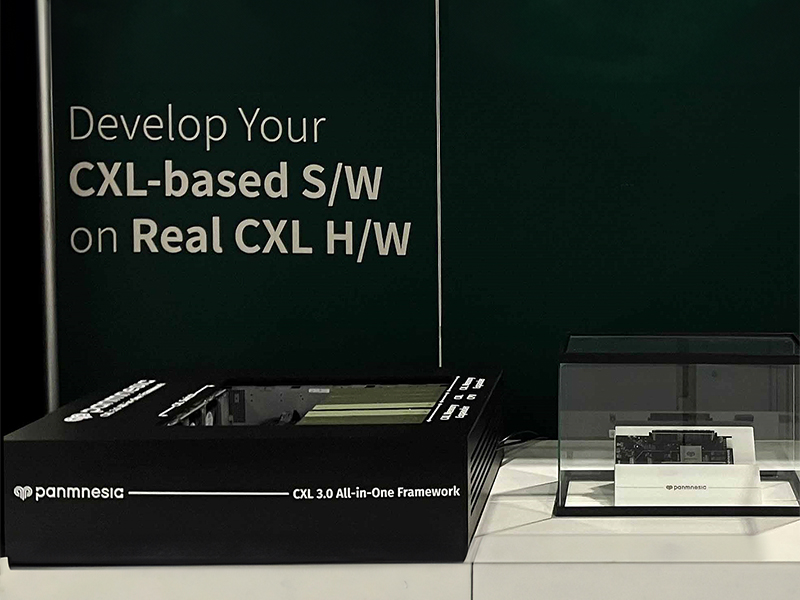

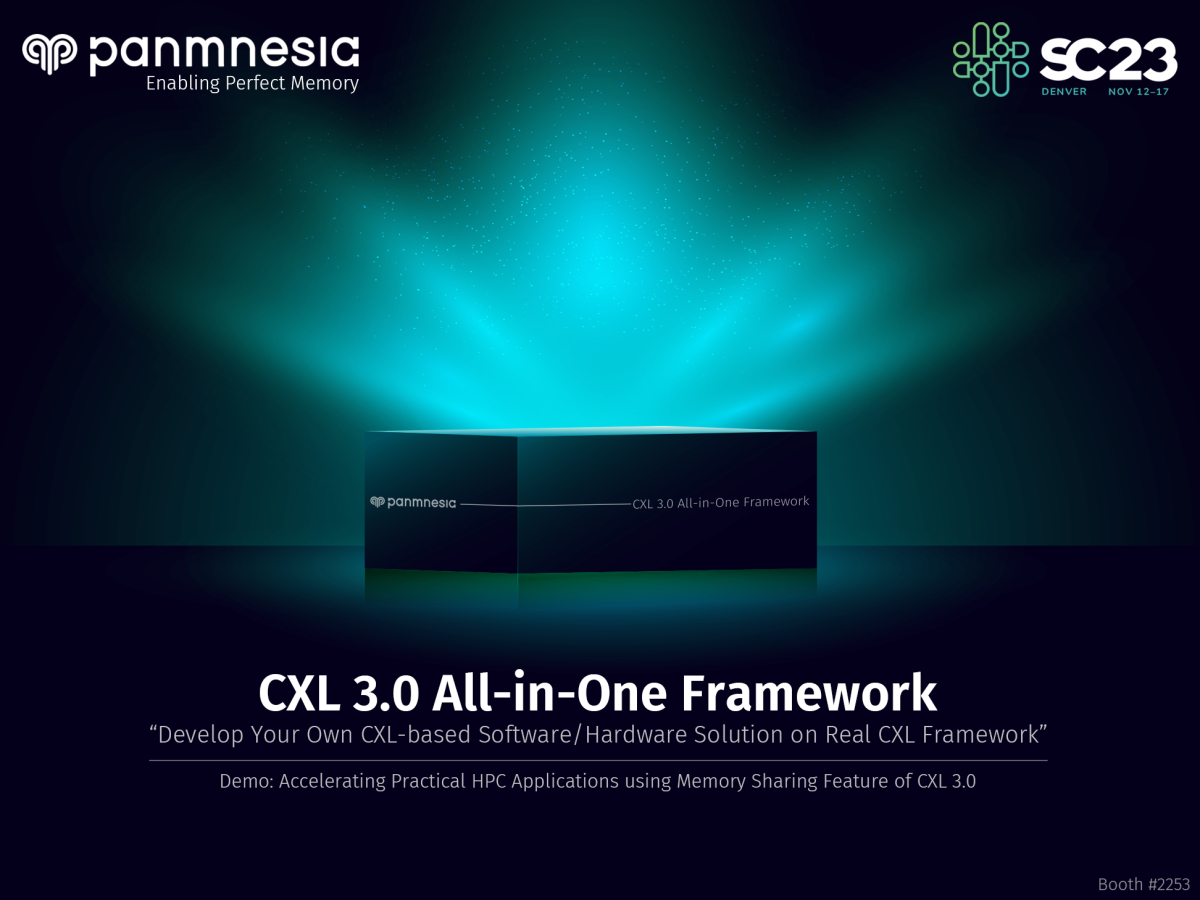

At the Supercomputing Conference 2023 (SC23), Panmnesia, a leader in CXL Intellectual Property, unveiled its pioneering CXL 3.0 All-in-One Framework. SC23, a pivotal event in the HPC industry, was held at the Colorado Convention Center in Denver from November 14 to 16 and drew key players such as Google, AWS, and Microsoft.

Compute Express Link (CXL), the cornerstone of Panmnesia’s expertise, is an interconnect technology that links a range of system devices, enabling on-demand memory use and cost-efficient memory management in HPC and data center environments.

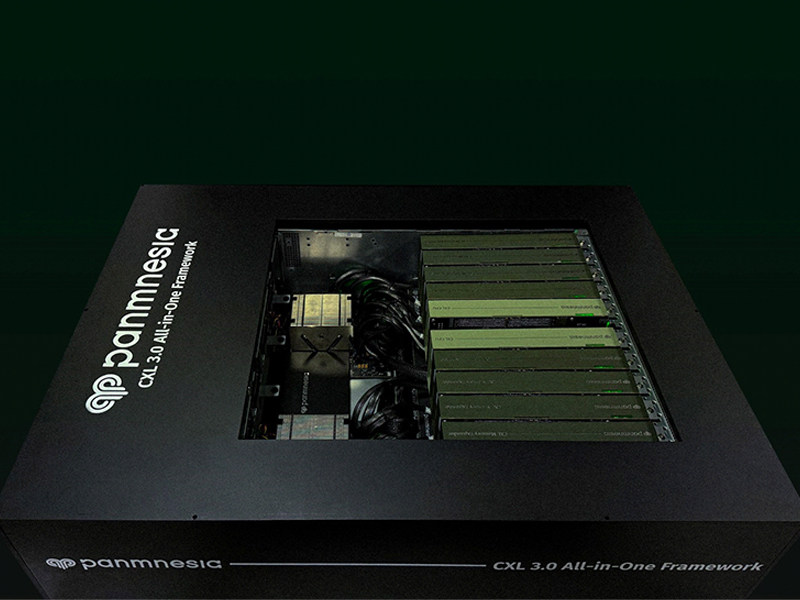

Panmnesia’s CXL 3.0 All-in-One Framework

Panmnesia’s latest endeavor, the CXL 3.0 All-in-One Framework, was a highlight at SC23, showcasing their ongoing dedication to CXL technology innovation. Dr. Myoungsoo Jung, the CEO and founder of Panmnesia, expressed his enthusiasm for the framework’s launch. “We’re thrilled to introduce our renewed framework,” he said, highlighting the framework’s enhanced internal architecture and advanced CXL 3.0 IPs. This development aims to provide an efficient and comprehensive environment for customers to develop custom CXL software and hardware solutions.

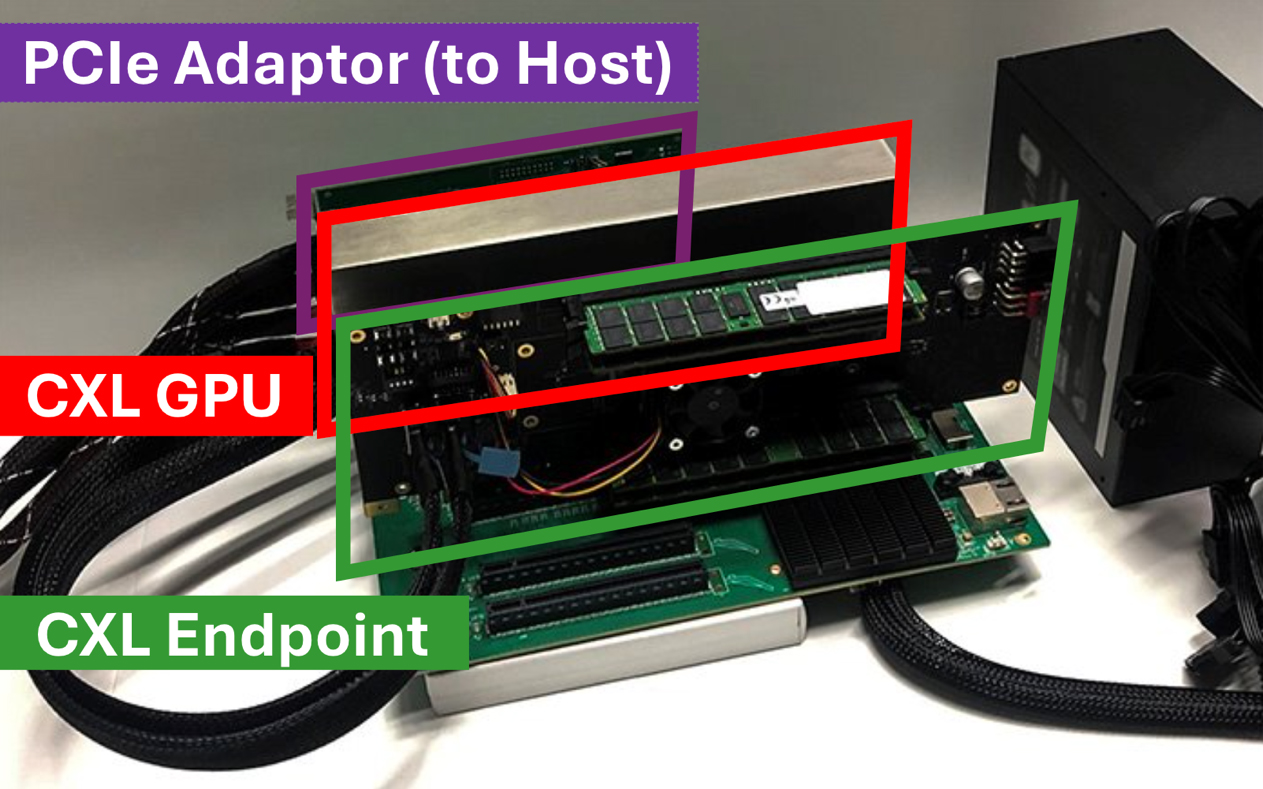

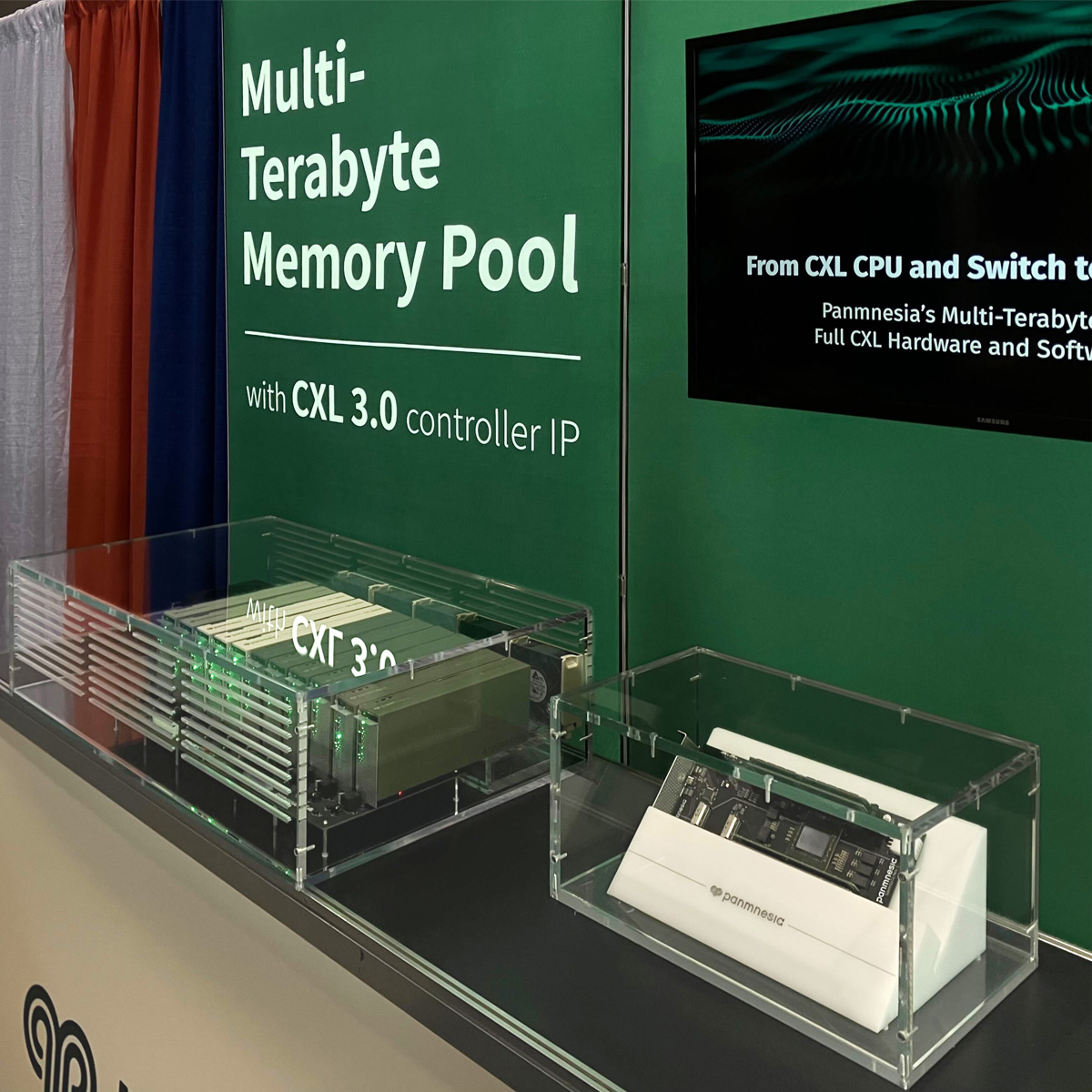

This framework features a full array of hardware and software components designed for robust development. The hardware suite includes state-of-the-art CXL 3.0 devices: a CXL-enabled CPU, a CXL switch, and various CXL endpoints. These devices are built with Panmnesia’s proprietary CXL 3.0 IPs and support all three CXL protocols: CXL.io, CXL.cache, and CXL.mem. Panmnesia emphasizes that these devices can exercise features unique to CXL 3.0, including memory sharing.

A pivotal component of the framework is the CXL switch, which connects devices according to user specifications. To make this connectivity configurable, Panmnesia designed its CXL switch to incorporate software known as the fabric manager, which manages the routing rules inside the switches. Moreover, the switch is not limited to interconnecting devices within the framework; it can also connect to external devices or even to another switch. This lets users scale their framework easily and build systems that closely resemble actual production environments.
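The text does not describe the fabric manager's internals or the CXL routing-table format, so the following is only a toy model of the division of labor it describes: switches merely look up a destination in their routing table, a separate manager installs and removes the rules, and cascaded switches chain those lookups. `ToySwitch`, `ToyFabricManager`, `trace`, and the port numbers are hypothetical.

```python
from typing import Dict, List, Tuple

class ToySwitch:
    """Hypothetical switch model: forwards by destination ID via a routing table."""
    def __init__(self, name: str) -> None:
        self.name = name
        self.routes: Dict[int, int] = {}  # destination ID -> egress port

    def forward(self, dest_id: int) -> int:
        if dest_id not in self.routes:
            raise LookupError(f"{self.name}: no route to destination {dest_id}")
        return self.routes[dest_id]

class ToyFabricManager:
    """Hypothetical fabric manager: the only component that edits routing rules."""
    def __init__(self) -> None:
        self.switches: Dict[str, ToySwitch] = {}

    def add_switch(self, switch: ToySwitch) -> None:
        self.switches[switch.name] = switch

    def bind(self, switch_name: str, dest_id: int, egress_port: int) -> None:
        self.switches[switch_name].routes[dest_id] = egress_port

    def unbind(self, switch_name: str, dest_id: int) -> None:
        self.switches[switch_name].routes.pop(dest_id, None)

def trace(fm: ToyFabricManager, path: List[str], dest_id: int) -> List[Tuple[str, int]]:
    """Follow a request through a chain of switches and report each egress port."""
    return [(name, fm.switches[name].forward(dest_id)) for name in path]

if __name__ == "__main__":
    fm = ToyFabricManager()
    for name in ("sw0", "sw1"):
        fm.add_switch(ToySwitch(name))
    # Memory device 42 sits behind sw1 port 1; sw0 reaches sw1 through its port 3.
    fm.bind("sw0", 42, 3)
    fm.bind("sw1", 42, 1)
    print(trace(fm, ["sw0", "sw1"], 42))  # [('sw0', 3), ('sw1', 1)]
```

Keeping rule changes in one manager means reconfiguring or extending the fabric (for example, cascading a new switch) only touches routing tables, not the devices themselves.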

Panmnesia’s CXL 3.0 All-in-One Framework not only provides advanced hardware but also delivers a comprehensive software stack crucial for executing user-level applications with CXL enhancements. This includes a specialized OS based on Linux, complete with CXL device drivers and a CXL-aware virtual memory subsystem, enabling applications in AI and computational science to fully utilize CXL without requiring code changes.

The framework garnered significant attention at SC23, appealing to those looking to incorporate CXL into their solutions. It received positive feedback for its holistic approach, offering both hardware and software capabilities. Software developers were particularly impressed with its readiness for user-level applications, while hardware developers valued its support for interoperability testing, a key aspect in CXL hardware development and testing. This dual appeal underscores Panmnesia’s success in providing a comprehensive, ready-to-use solution for CXL technology integration.

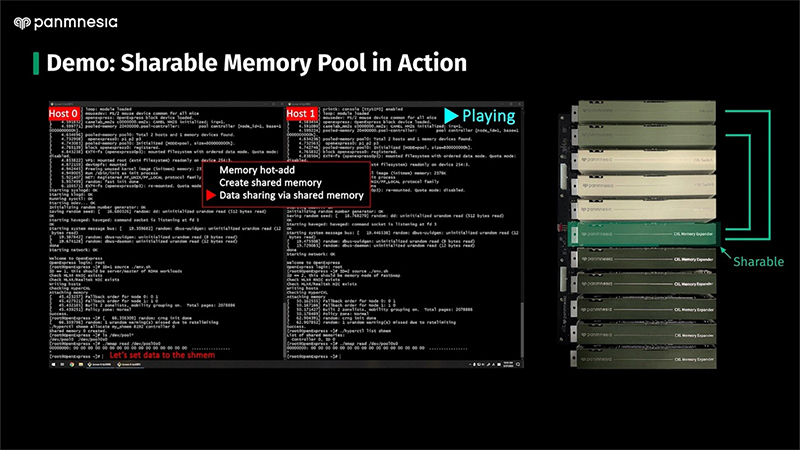

CXL 3.0 memory sharing demonstration

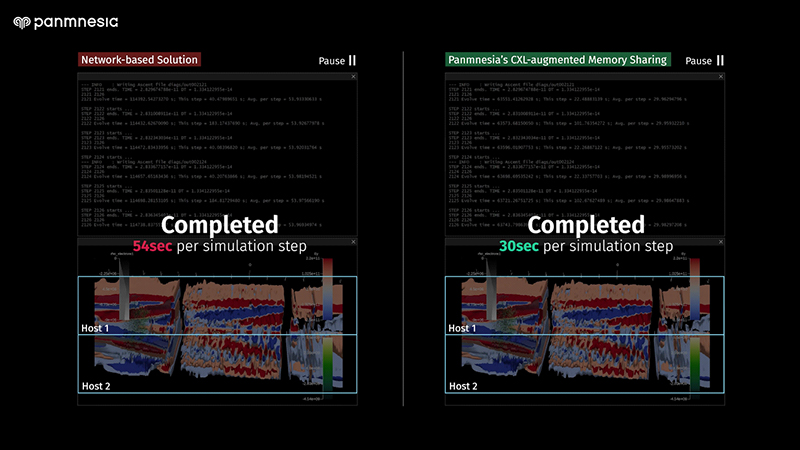

At the booth, Panmnesia demonstrated CXL 3.0 memory sharing using its CXL 3.0 All-in-One Framework. The demonstration used a plasma simulation, an HPC application, to illustrate the benefits of CXL memory sharing. The simulation models the movement of charged particles by calculating the forces between them. For efficiency, it is typically parallelized by dividing the space into smaller sections and assigning each section to a different host.

In order to accurately compute the forces on a particle, the host responsible for that particle must gather information like charge and velocity from neighboring particles. These neighboring particles might be located on different hosts, necessitating data exchange among hosts. Traditional methods for this exchange rely on network communication, which can be slow due to network latency, a known issue in HPC scientific applications.
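The simulation's actual decomposition is not given here. The sketch below assumes a 1D split of the domain into equal-width slabs and a force cutoff radius, both hypothetical, to show why particles near a slab boundary must be shared with the neighboring host. Each entry in the returned map is data that, in the traditional approach, travels over the network.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Particle = Tuple[int, float]  # (particle ID, x position)

def owner(x: float, box: float, n_hosts: int) -> int:
    """Host that owns position x when [0, box) is split into equal-width slabs."""
    return min(int(x / box * n_hosts), n_hosts - 1)

def halo_exports(particles: List[Particle], box: float, n_hosts: int,
                 cutoff: float) -> Dict[Tuple[int, int], List[int]]:
    """Map (owner host, neighbor host) -> IDs of particles the owner must share,
    i.e. particles within `cutoff` of the neighbor's slab (non-periodic, 1D)."""
    width = box / n_hosts
    exports: Dict[Tuple[int, int], List[int]] = defaultdict(list)
    for pid, x in particles:
        src = owner(x, box, n_hosts)
        left_edge, right_edge = src * width, (src + 1) * width
        if src > 0 and x - left_edge < cutoff:
            exports[(src, src - 1)].append(pid)
        if src < n_hosts - 1 and right_edge - x < cutoff:
            exports[(src, src + 1)].append(pid)
    return dict(exports)

if __name__ == "__main__":
    particles = [(0, 1.0), (1, 24.5), (2, 26.0), (3, 60.0), (4, 99.0)]
    # 4 hosts, each owning a 25-unit slab; forces need neighbors within 2 units
    print(halo_exports(particles, box=100.0, n_hosts=4, cutoff=2.0))
    # {(0, 1): [1], (1, 0): [2]}
```

Only the two particles sitting next to the boundary at x = 25 need to be exchanged. In a real simulation this happens every timestep for every boundary, which is why exchange latency adds up.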

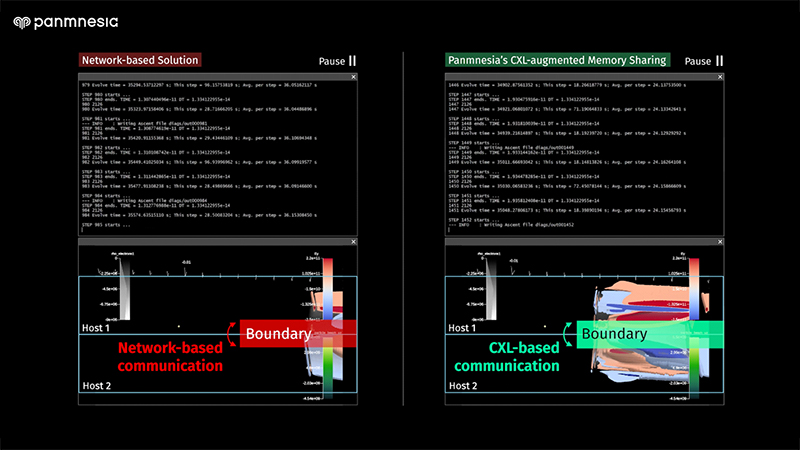

During SC23, Panmnesia introduced a solution based on CXL 3.0 that reduces the overhead of inter-host data exchange. This solution utilizes the memory sharing feature of CXL 3.0 to facilitate data exchange. It enables multiple hosts to access data stored in CXL-attached memory. Panmnesia’s approach, which automates key memory sharing functions, enhances performance significantly. They also shared details of their software implementation, which is built on a popular data exchange library.
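Panmnesia's software is built on an unnamed data exchange library, so its API is not reproduced here. The sketch below only illustrates the underlying idea of exchanging data through a shared region with plain loads and stores rather than messages. A Python `bytearray` stands in for the CXL-attached memory, and `SharedRegion`, `HostView`, and the (charge, velocity) slot layout are hypothetical.

```python
import struct

SLOT_FMT = "<dd"                       # per-particle slot: (charge, velocity) as doubles
SLOT_SIZE = struct.calcsize(SLOT_FMT)  # 16 bytes

class SharedRegion:
    """Stand-in for a CXL 3.0 shared memory region. A bytearray plays the role of
    the CXL-attached memory; on real hardware each host maps the same range."""
    def __init__(self, n_particles: int) -> None:
        self.buf = bytearray(n_particles * SLOT_SIZE)

class HostView:
    """One host's load/store view of the shared region (no message passing)."""
    def __init__(self, host_id: int, region: SharedRegion) -> None:
        self.host_id = host_id
        self.mem = memoryview(region.buf)

    def publish(self, pid: int, charge: float, velocity: float) -> None:
        """Store a particle's state directly into its slot in shared memory."""
        struct.pack_into(SLOT_FMT, self.mem, pid * SLOT_SIZE, charge, velocity)

    def read(self, pid: int):
        """Load a neighbor particle's state directly from shared memory."""
        return struct.unpack_from(SLOT_FMT, self.mem, pid * SLOT_SIZE)

if __name__ == "__main__":
    region = SharedRegion(n_particles=8)
    host_a, host_b = HostView(0, region), HostView(1, region)
    host_a.publish(3, charge=-1.0, velocity=0.25)  # host A writes a boundary particle
    print(host_b.read(3))                          # host B reads it: (-1.0, 0.25)
```

A real deployment also needs synchronization between the publish and read phases (for example, a barrier per timestep), which this sketch omits.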

Panmnesia highlighted the advantage of its CXL 3.0-based solution over traditional network-based methods by demonstrating a 1.8x speedup. It also pointed out that the solution’s utility extends beyond plasma simulation to a broad spectrum of applications such as molecular dynamics, bioinformatics, and computational fluid dynamics, making it applicable across many scientific domains in HPC environments.

Panmnesia’s introduction of this framework marks a pivotal moment for the CXL ecosystem, facilitating accelerated development for both software and hardware enterprises. Furthermore, Panmnesia has solidified its position as a leader in CXL technology with this industry-first public demonstration of CXL 3.0 memory sharing in a complete system, inclusive of a CXL switch.

For those interested in exploring Panmnesia’s All-in-One framework and related demonstrations further, videos are available on YouTube (refer to the link below).

Panmnesia is excited to announce its participation in Supercomputing (SC) 2023, which will take place in Denver, Colorado, US, from November 12th to November 17th. During this event, Panmnesia will unveil its groundbreaking CXL 3.0 all-in-one framework to a global audience.

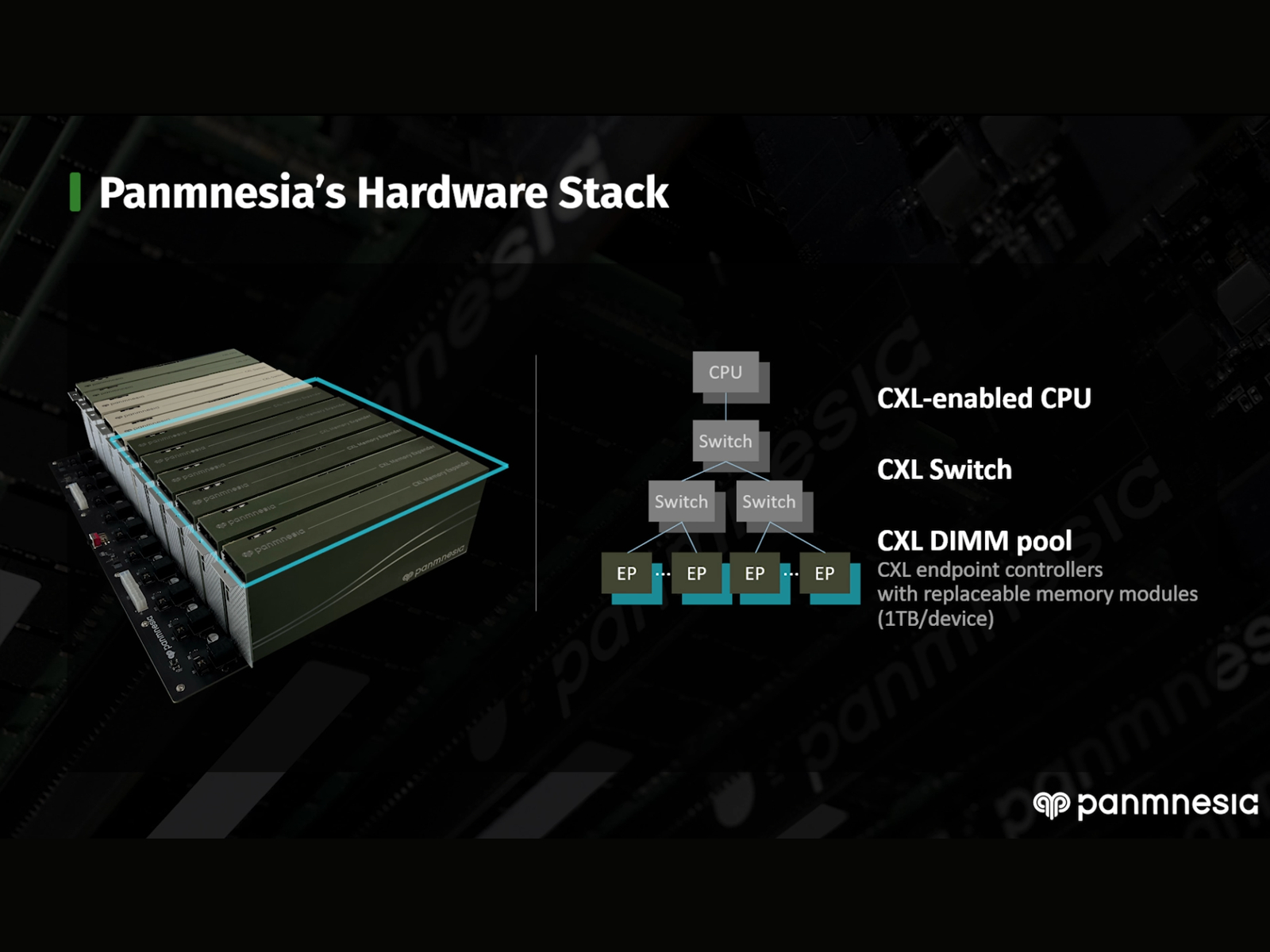

Panmnesia’s CXL framework solution, featuring the latest CXL 3.0 technologies, enables a highly scalable memory pool shared by multiple hosts through Port-Based Routing (PBR). With this solution, users can share data efficiently across the pooled memory. Panmnesia’s CXL all-in-one framework provides both a CXL 3.0 hardware stack (CXL CPUs, switches, and DIMM pools) and a corresponding Linux-based CXL 3.0 software stack. Users can run their applications without modification and benefit from the advanced CXL 3.0 features within the framework. For companies interested in CXL technology, this framework is therefore a strong option for developing their own CXL hardware and software solutions.

Panmnesia will also showcase a demonstration that accelerates practical HPC applications using the memory sharing feature of CXL 3.0. Join us to explore our framework and demo at booth 2253. You can find more detailed information about the SC23 exhibition at the link below.

The CXL Consortium operates a blog that highlights leading companies in the field of CXL technology. Recently, the CXL Consortium’s member spotlight blog featured Panmnesia.

The post provides a brief introduction to Panmnesia and outlines Panmnesia’s expertise. It also delves into Panmnesia’s perspective on CXL technology. Specifically, Panmnesia shares insights into the ideal use cases for CXL technology, the market segments that will benefit the most from it, and how CXL will reshape data center configurations. The post concludes by explaining how Panmnesia’s CXL technology can assist other companies in adapting to this emerging CXL landscape.

For the complete blog post, you can follow the link provided below.

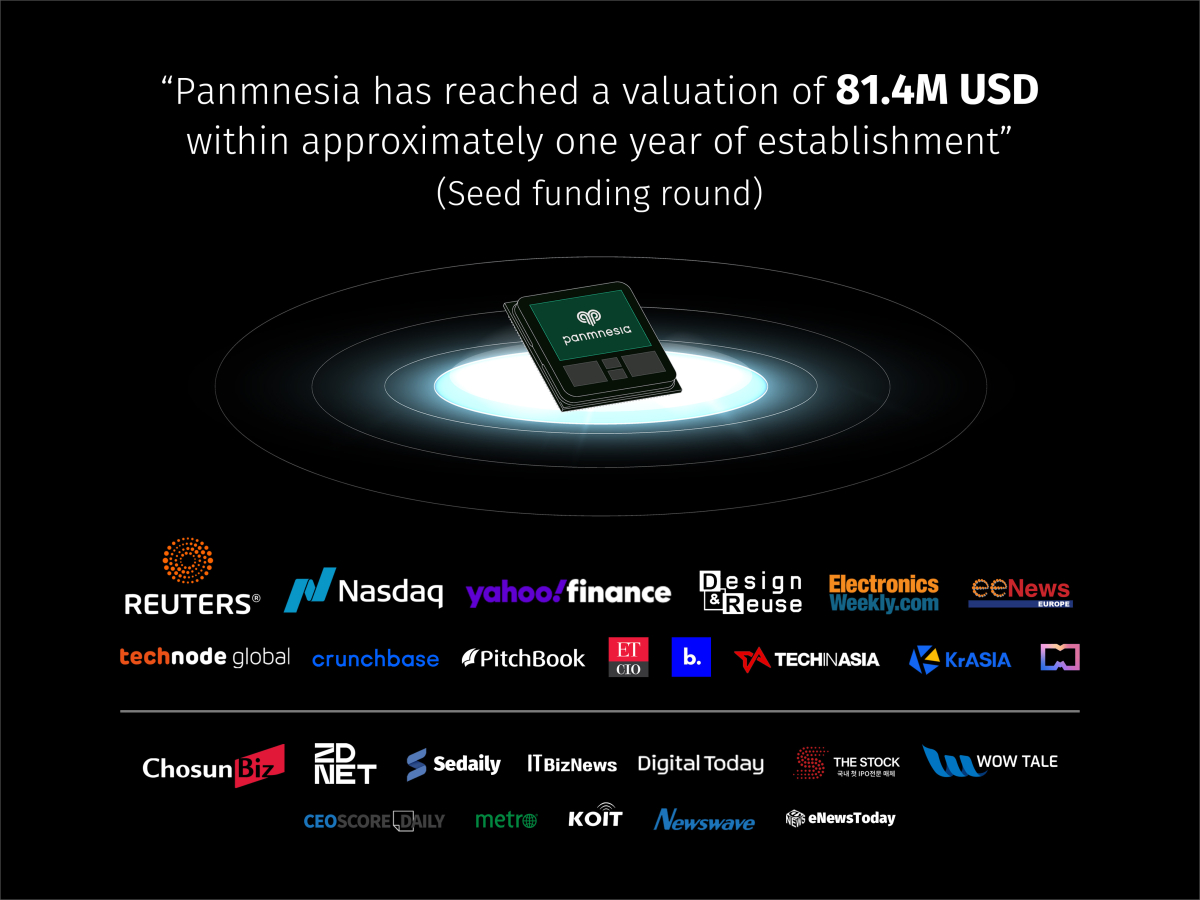

Recently, Panmnesia, a fabless semiconductor company focused on CXL (Compute Express Link) technology, announced that it had completed a seed funding round raising $12.5 million (16 billion KRW). The round brought the company’s post-money valuation to $81.4 million (103.4 billion KRW), an exceptional figure for a seed-stage company in South Korea’s semiconductor sector. Daekyo Investment led the round, which drew contributions from a group of seven investment firms including SL Investment, Smilegate Investment, GNTech Venture Capital, Time Works Investment, Yuanta Investment, and Quantum Ventures Korea.

Panmnesia’s machine learning-driven prefetching technology for CXL-SSD has been selected as KAIST Breakthroughs this year.

KAIST Breakthroughs is a webzine that highlights selected innovative technologies across engineering disciplines, including electrical engineering, biotechnology, environmental engineering, and industrial engineering. This year, KAIST Breakthroughs highlighted 31 technologies, including Panmnesia’s pioneering work.

Panmnesia’s featured technology focuses on improving the performance of systems that use CXL-SSDs. With the CXL interface, an SSD, which is normally used as block storage, can serve as a huge memory device. The CXL-SSD benefits from host-side caching while providing tremendous memory capacity, so it is attracting considerable attention from both industry and academia.

However, systems employing CXL-SSDs may suffer the SSD’s long latency whenever a cache miss occurs. Panmnesia addressed this challenge by developing machine learning-based prefetching techniques that predict future memory accesses and fetch the data before the actual request arrives. Building on an in-depth understanding of CXL-based system hierarchies, Panmnesia crafted a solution that accurately forecasts future access addresses and determines the optimal prefetching timing.
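Panmnesia's predictor is machine learning-based and its model is not described here. As a much simpler stand-in, the sketch below uses a first-order delta-correlation table: it learns which cache-line delta tends to follow the previous one and chains those predictions to produce addresses to prefetch. `DeltaPrefetcher` and the 64-byte line size are assumptions for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

class DeltaPrefetcher:
    """Toy delta-correlation predictor (a stand-in, not an ML model): records which
    cache-line delta follows the previous one and chains predictions forward."""

    def __init__(self, line_bytes: int = 64) -> None:
        self.line_bytes = line_bytes
        self.table: Dict[int, Counter] = defaultdict(Counter)  # prev delta -> next-delta counts
        self.last_line: Optional[int] = None
        self.last_delta: Optional[int] = None

    def observe(self, addr: int) -> None:
        """Train on one demand access (byte address)."""
        line = addr // self.line_bytes
        if self.last_line is not None:
            delta = line - self.last_line
            if self.last_delta is not None:
                self.table[self.last_delta][delta] += 1
            self.last_delta = delta
        self.last_line = line

    def predict(self, depth: int = 1) -> List[int]:
        """Return up to `depth` predicted line-aligned byte addresses to prefetch."""
        preds: List[int] = []
        line, delta = self.last_line, self.last_delta
        for _ in range(depth):
            if line is None or delta is None:
                break
            counts = self.table.get(delta)
            if not counts:
                break
            delta = counts.most_common(1)[0][0]
            line += delta
            preds.append(line * self.line_bytes)
        return preds

if __name__ == "__main__":
    pf = DeltaPrefetcher()
    for line in (0, 1, 4, 5, 8, 9):  # alternating +1, +3 line pattern
        pf.observe(line * 64)
    print(pf.predict(depth=2))       # [768, 832]
```

A learned model can capture patterns this table cannot (long-range or irregular correlations), but the interface is the same: observe demand accesses, then emit addresses to fetch before they are requested.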

If you are intrigued by this innovative work, you can access the original article featured in KAIST Breakthroughs through the following link.

Panmnesia, the global frontrunner in CXL IP (Intellectual Property) advancement, is proud to unveil its groundbreaking, self-developed multi-terabyte, full-system CXL framework. This exciting development was launched during the 2023 Flash Memory Summit, held August 8-10 at the Santa Clara Convention Center, California. The Summit, a major annual event attracting over 3,000 participants, unites leading companies from around the globe, all specializing in memory and storage solutions.

All set to explore the horizon of technological advancement at this year’s Flash Memory Summit (FMS)? Make sure you’ve got Panmnesia on your must-see list!

This year at FMS 23, we’re beyond excited to present our live demo, entitled “Experience Reality, Not Blueprints: Panmnesia’s Authentic, Multi-Terabyte CXL Memory Disaggregation Framework.”

We believe in the power of action over words, which is why we’re bringing you a fully-operational CXL solution. Panmnesia’s groundbreaking CXL system includes all the requisite hardware components, ranging from custom-designed CXL CPUs and switches, to our state-of-the-art DIMM pool devices.

Our robust framework enables multi-terabyte capacity by integrating a myriad of memory devices via multi-tiered CXL switches. We can’t wait to showcase how CXL technology can supercharge real-world applications. As part of our demo at FMS 23, we’ll demonstrate a deep learning recommendation system running on Linux OS with Panmnesia’s innovative CXL framework. Plus, you can gain deeper insights from the other demo videos we’ll be sharing.

Intrigued by what real innovation looks like? Make sure to visit us at booth #655 during the Flash Memory Summit, and get up close with the future of technology, brought to life by Panmnesia!

Panmnesia is excited to announce our participation in the Flash Memory Summit (FMS) 2023, taking place in Santa Clara, California, US, from August 8th to August 10th. This premier event is a global platform for industry leaders and innovative thinkers to showcase and discuss the latest advancements in flash memory technology.

At this year’s FMS, Panmnesia will be in the spotlight with our groundbreaking full-system CXL (Compute Express Link) framework. This pioneering infrastructure consists of customized CXL CPUs, switches, and DIMM pools. Our innovation doesn’t stop there, though; we’ve designed the framework to offer multi-terabyte capacity by connecting a plethora of memory devices through multi-level CXL switches.

One of the standout features of our system is the incorporation of DIMM pooling technology. By strategically utilizing this technology, we’ve made it possible to achieve substantial cost reductions, all the while maintaining the system’s high performance and reliability.

Our team will demonstrate exactly how CXL technology can revolutionize real-world applications. Visitors will be treated to a live and captivating demo of recommendation systems running on a real deep learning framework with Linux OS. This example will provide a tangible glimpse into how our technologies can enhance and streamline systems in various sectors, from cloud computing to high-performance computing (HPC) to data centers and beyond.

For everyone interested in learning more about Panmnesia’s innovative technologies, we extend a warm invitation to visit us at booth 655 at the Flash Memory Summit. Our team will be on hand to share insights, answer questions, and explore potential collaborations. We look forward to meeting fellow industry enthusiasts and exploring the future of memory technology together at FMS 2023!

Panmnesia delivered an invited presentation (title: Breaking Down CXL: Why It’s Crucial and What’s Next) at the SIGARCH Korea Workshop 2023, held at Seoul National University (SNU).

During this presentation, we extensively dissected the ins and outs of Compute Express Link (CXL) technology, highlighting its relevance and the present status of its advancement.

CXL is a potent interconnect standard offering high-speed, low-latency communication between CPUs and diverse accelerators such as FPGAs and GPUs. It allows these devices to share memory space and maintain cache coherence, resulting in swift data access and efficient processing of intricate tasks. Our exploration of CXL began from its genesis, the CXL 1.0 specification, and continued through its successive iterations, including the recently launched CXL 3.0. We emphasized the unique features of each iteration, differences among them, and the advantages of CXL compared to other interconnect standards. Beyond just the technicalities of CXL, we also navigated its real-world impact. We specifically addressed how CXL is deployed in large-scale data centers and its role in fostering innovation in areas like artificial intelligence and machine learning. Overall, this talk served as a platform to share a thorough understanding of CXL technology, from its fundamental principles to its practical applications.

It was truly delightful discussing CXL technology with a number of professors and students who are passionate about computer architecture. Thank you for the invitation!

Panmnesia had the privilege of giving a talk at the OCP (Open Compute Project) APAC Tech Day, held at COEX in Seoul.

Our presentation, “Memory Pooling over CXL and Go Beyond with AI,” highlighted the importance and fundamentals of CXL (Compute Express Link), along with its potential to propel large-scale AI applications forward.

Specifically, we first provided a comprehensive overview of CXL technology and examined why it matters. We then explored the different versions of CXL, starting with the original CXL 1.0 specification and its subsequent updates, including the recently released CXL 3.0. We also discussed the technology’s real-world applications, such as artificial intelligence and machine learning.

We’re incredibly grateful to our engaged audience for their interest and to the event organizers for hosting such a successful day. We look forward to participating in future OCP events!

Panmnesia will be delivering an invited talk at OCP (Open Compute Project) APAC Tech Day.

Our talk will provide a comprehensive overview of CXL (Compute Express Link) technology, from its foundational principles to its practical applications, such as machine learning.

We cordially invite you to join us at OCP APAC Tech Day on July 4th at COEX, Seoul. If you’re interested in delving into the intricacies of CXL technology and widening your understanding, this event is perfect for you.

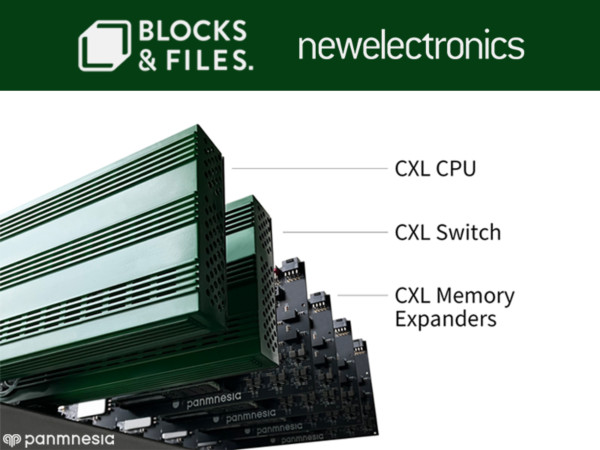

Panmnesia’s groundbreaking AI vector search solution has been highlighted in recent ‘Blocks and Files’ and ‘New Electronics’ articles. The core innovation of our CXL-augmented AI vector search solution is its ability to separate memory from the central processing unit, achieved through the implementation of CXL 3.0.

This decoupling allows large volumes of AI search data to be held in the memory pool, potentially up to 4 petabytes per CPU root complex. Notably, our CXL-augmented AI vector search solution speeds up query processing by 111.1 times compared with existing approaches, including the one used in Microsoft’s production service.

For an in-depth exploration of the AI vector search solution, please refer to the links below.

Panmnesia had the pleasure of being invited by Professor Byung-Gon Chun of Seoul National University, who is also the CEO of FriendliAI, to present at his seminar. During our presentation, we shed light on two significant applications of advanced Compute Express Link (CXL) technology.

First, we discussed a hardware-software co-designed system for approximate nearest neighbor search. This solution uses CXL to disaggregate memory from host resources and optimizes search performance despite the longer latency of CXL-attached far memory. It employs novel strategies and uses all available hardware, achieving lower query latency than current platforms.

Secondly, we presented a robust system for managing large recommendation datasets. It harnesses CXL to integrate persistent memory and graphics processing units seamlessly, enabling direct memory access without software intervention. With a sophisticated checkpointing technique that updates model parameters and embeddings across training batches, we’ve seen a significant boost in training performance and energy savings.

We’re looking forward to more such opportunities! Keep an eye out for our future endeavors!

Panmnesia, Inc., the industry leader in data memory and storage solutions, is thrilled to announce that its cutting-edge SSD technology, ExPAND, has been accepted for presentation at the prestigious HotStorage 2023 conference, to be held in Boston this July.

Panmnesia’s ExPAND is a groundbreaking development that integrates Compute Express Link (CXL) with SSDs, allowing scalable access to large memory. Although CXL-SSDs are currently slower than DRAM, ExPAND mitigates this by offloading last-level cache (LLC) prefetching from the host CPU to the CXL-SSDs, which dramatically improves performance.

The cornerstone of Panmnesia’s ExPAND is its novel use of a heterogeneous prediction algorithm for prefetching, which ensures data consistency with CXL sub-protocols. The system also allows for detailed examination of prefetch timeliness, paving the way for more accurate latency estimations.

Furthermore, ExPAND is aware of CXL multi-tiered switching, which lets it determine the end-to-end latency of each CXL-SSD and estimate prefetch timeliness precisely. As a result, fewer accesses have to wait on the CXL-SSDs, and most data can be served directly from the host cache.
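ExPAND's actual estimator is not described here. The sketch below only illustrates the general idea of topology-aware timeliness: end-to-end latency grows with each switch tier between the host and a CXL-SSD, and a prefetch must be issued enough accesses ahead to cover that latency. The function names, the round-trip model, and every latency value are hypothetical.

```python
import math
from typing import Sequence

def end_to_end_latency_ns(device_ns: float, switch_hop_ns: Sequence[float]) -> float:
    """Device access time plus the request and response traversal of every switch
    tier between the host and the CXL-SSD (all inputs are assumed, not measured)."""
    return device_ns + 2 * sum(switch_hop_ns)

def prefetch_distance(latency_ns: float, ns_per_access: float) -> int:
    """How many demand accesses ahead a prefetch must be issued so that the data
    arrives no later than the access that needs it."""
    return max(1, math.ceil(latency_ns / ns_per_access))

def is_timely(issue_ns: float, use_ns: float, latency_ns: float) -> bool:
    """A prefetch is timely if it completes by the time the data is used."""
    return issue_ns + latency_ns <= use_ns

if __name__ == "__main__":
    # Hypothetical numbers: the same SSD placed one vs. two switch tiers away.
    near = end_to_end_latency_ns(5000.0, [100.0])
    far = end_to_end_latency_ns(5000.0, [100.0, 150.0])
    print(near, prefetch_distance(near, 200.0))  # 5200.0 26
    print(far, prefetch_distance(far, 200.0))    # 5500.0 28
```

With these assumed numbers, the SSD behind two tiers needs its prefetches issued two accesses earlier than the one behind a single tier. A prefetcher that ignores the topology would issue one of them too late or the other unnecessarily early.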

Early tests are already showing promise: Panmnesia’s ExPAND improves the performance of graph applications by 2.8 times, outperforming CXL-SSD pools that use a variety of prefetching strategies.

ExPAND’s acceptance into HotStorage 2023 underscores its potential to revolutionize the way we manage and access data. With ExPAND, Panmnesia, Inc. continues to solidify its reputation as a frontrunner in data storage solutions, pushing the boundaries of technology and driving the industry forward.

The full details of ExPAND and its advantages will be presented at HotStorage 2023. Stay tuned for more exciting updates from the conference.

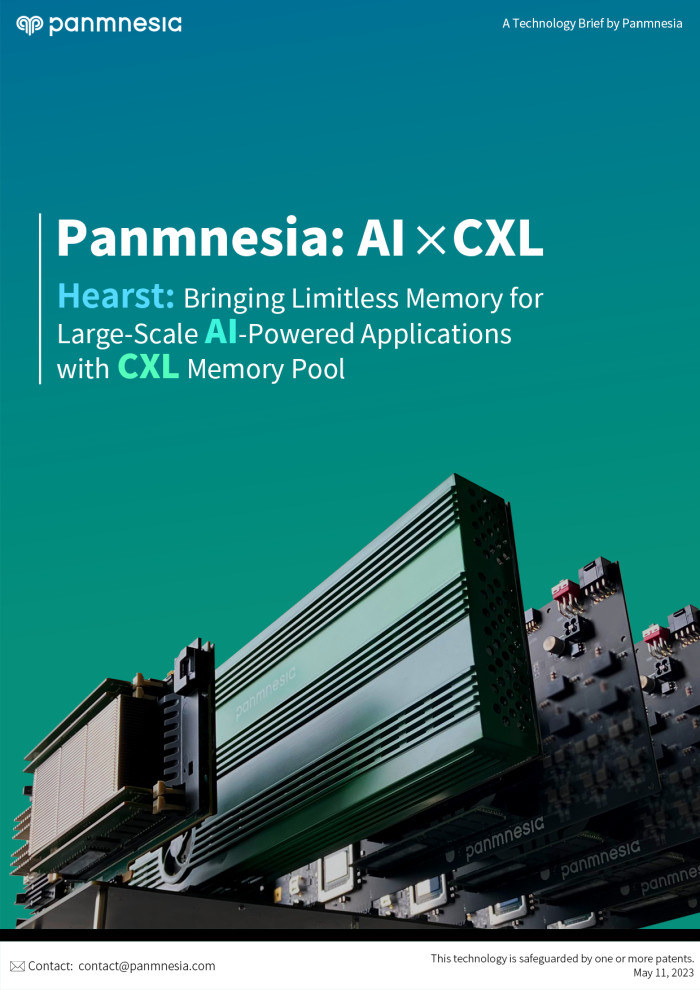

Interested in discovering the potential applications of the CXL solution?

We invite you to explore a recent white paper detailing Panmnesia’s CXL innovation, known as Hearst, which significantly enhances AI-driven applications (such as recommendation systems and vector search) through exceptional performance and cost efficiency.

In summary, Hearst’s distinguishing features are:

1. a port-based routing (PBR) CXL switch for high scalability,
2. near-data processing for high performance, and
3. DIMM pooling technology for low cost.

We hope you find this information helpful and enjoyable to read.

We are thrilled to announce that Panmnesia’s groundbreaking research has been accepted at the esteemed USENIX ATC ’23, which had a competitive 18% acceptance rate.

Our study accelerates nearest neighbor search services on billion-point datasets through CXL memory pooling and a coherence network topology. This approach addresses the substantial memory requirements of approximate nearest neighbor search (ANNS): Microsoft’s search engines (Bing and Outlook), for example, demand over 40TB for 100B+ vectors of 100 dimensions each, and Alibaba’s e-commerce platforms require TB-scale memory for their 2B+ vectors of 128 dimensions.
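Where the memory figures come from can be checked with simple arithmetic. The sketch assumes each dimension is stored as a 4-byte float32, which the text does not state, and counts only the raw vectors, not any index or graph structure an ANNS engine adds on top.

```python
TB = 10**12  # decimal terabyte

def raw_vector_bytes(n_vectors: int, dims: int, bytes_per_dim: int = 4) -> int:
    """Bytes needed for the raw vectors alone (4 bytes per dimension = float32),
    excluding any index or graph structure an ANNS engine adds on top."""
    return n_vectors * dims * bytes_per_dim

bing_outlook = raw_vector_bytes(100 * 10**9, 100)  # 100B vectors x 100 dims
alibaba = raw_vector_bytes(2 * 10**9, 128)         # 2B vectors x 128 dims

print(f"Bing/Outlook: {bing_outlook / TB:.1f} TB")  # Bing/Outlook: 40.0 TB
print(f"Alibaba: {alibaba / TB:.3f} TB")            # Alibaba: 1.024 TB
```

Under the float32 assumption, the raw vectors alone reach 40 TB for 100B vectors, which lines up with the "over 40TB" figure once index overhead and growth beyond 100B vectors are included, and Alibaba's roughly 1 TB is consistent with "TB-scale".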

Join us in Boston this July to learn more about our exciting findings!

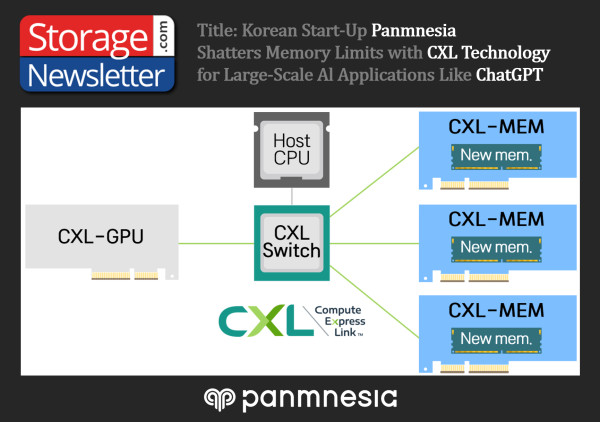

Get ready for a revolution in AI acceleration with Panmnesia’s CXL-augmented system - making waves in StorageNewsletter!

Our groundbreaking technology empowers GPUs to unleash a massive memory space of up to 4 Petabytes, supercharging the training of colossal machine learning models like recommendation systems and ChatGPT using just a few GPUs!

Embrace substantial cost savings and push the boundaries of AI capabilities for your business! Don’t miss out: explore our trailblazing tech via the following link.

Panmnesia’s research team has developed an efficient machine learning framework designed for large-scale data training in datacenters, utilizing CXL 3.0 technology. Our recent achievements have been highlighted in Forbes, which can be accessed via the first link below.

To gain a deeper understanding of our CXL 3.0-based training system, we recommend watching our informative video presentation via the second link below. The video demonstrates how CXL can enhance large-scale machine learning acceleration and provides insights for ML and AI system designers to consider when implementing this technology.

We are excited to announce that our team will be showcasing this innovative system at the IPDPS ‘23 conference in Florida this May. We hope to see you there and look forward to discussing our advancements with you in person!

Our team has successfully expanded memory by over a dozen terabytes, making our system the largest CXL (Compute Express Link) hardware and software solution in the world. It is designed to work seamlessly with multiple CPUs, CXL switches, and CXL memory expanders, offering a versatile option that can be easily integrated into any tech setup.

We’re excited to share that we’ve validated the system with the latest full Linux stack and proven its capabilities on large-scale deep learning and machine learning-based recommendation systems. During our demonstration, we assigned all embedding features to remote CXL memory expansion without the need for an assistant module. Our software and hardware IPs integrate seamlessly with TensorFlow, which makes it easy to manage both training and serving.

We’re committed to pushing the boundaries of innovation and are eager to showcase the range of state-of-the-art, memory-intensive applications running on our system. We’re also excited to introduce an array of new hardware-architected devices in the pipeline. Stay connected for further updates on our exciting new innovations!

We’re open to exploring collaboration opportunities and would love to work with you. Please don’t hesitate to get in touch with us at [email protected] or [email protected] if you’re interested. Thanks for being a part of our journey!

Panmnesia, a KAIST start-up company that focuses on developing cache coherent interconnect (CCI) technologies using Compute Express Link (CXL), has garnered significant attention from the technology industry, particularly in the field of computing and memory. Seoul Finance recently published an article that highlights Panmnesia as the first developer of a real CXL system with both working hardware and software modules. The article includes an interview with Panmnesia’s CEO, who explains the significance of CXL and how it could impact various computing and memory industries.

CXL is a new cache-coherent interconnect standard that supports multiple protocols and enables cache-coherent communication between CPUs, memory devices, and accelerators. According to Panmnesia’s CEO, CXL is the most important technology for the next-generation memory semiconductor industry. The article explains that as demand for high-performance, high-capacity memory semiconductors grows, CXL could be a viable solution for memory disaggregation, allowing memory resources to be used efficiently and cost-effectively.

The article also discusses the potential impact of CXL on artificial intelligence (AI) acceleration and ChatGPT (Conversational AI based on the GPT model). Panmnesia’s CEO believes that CXL can play a crucial role in AI acceleration by offering large-scale memory subsystems to existing processing silicon and co-processing accelerators such as GPU, DPU, and TPU. This will enable faster data processing and more efficient use of resources.

Panmnesia has already secured a significant number of intellectual property rights related to CXL, including the CXL standard IP, and is currently in talks with various domestic and international institutions for collaboration. Panmnesia aims to become the leading solution provider in the CXL industry and is focused on developing new and innovative CCI technologies to meet the demands of various industries.

We had the privilege of attending a dynamic CXL workshop, where we were invited to share our knowledge and expertise as a leading vendor in CPU and memory technology. The workshop brought together industry experts from renowned companies such as Intel, AMD, Samsung, SK-Hynix, and our own company, Panmnesia. We were thrilled to participate in this high-level discussion, which explored the latest trends and innovations in CXL technology and its impact on the future of large-scale data-centric applications.

As a participant in the workshop, we had the opportunity to give an invited talk and share our insights and perspectives on the direction that data-centric applications should take. We highlighted the importance of cutting-edge research directions such as near-data processing and AI acceleration, and how they can be leveraged to drive innovation and progress in this field.

The discussions throughout the workshop were engaging and thought-provoking, and we were impressed by the wealth of knowledge and expertise shared by the attendees. We gained valuable insights into the latest trends and innovations in CXL technology, which will undoubtedly shape our thinking and approach to data-centric applications in the future.

Overall, we were honored to have been a part of this workshop and grateful for the opportunity to share our knowledge and experience with other industry leaders. We look forward to continued engagement with CXL technology and its impact on the future of large-scale data-centric applications.

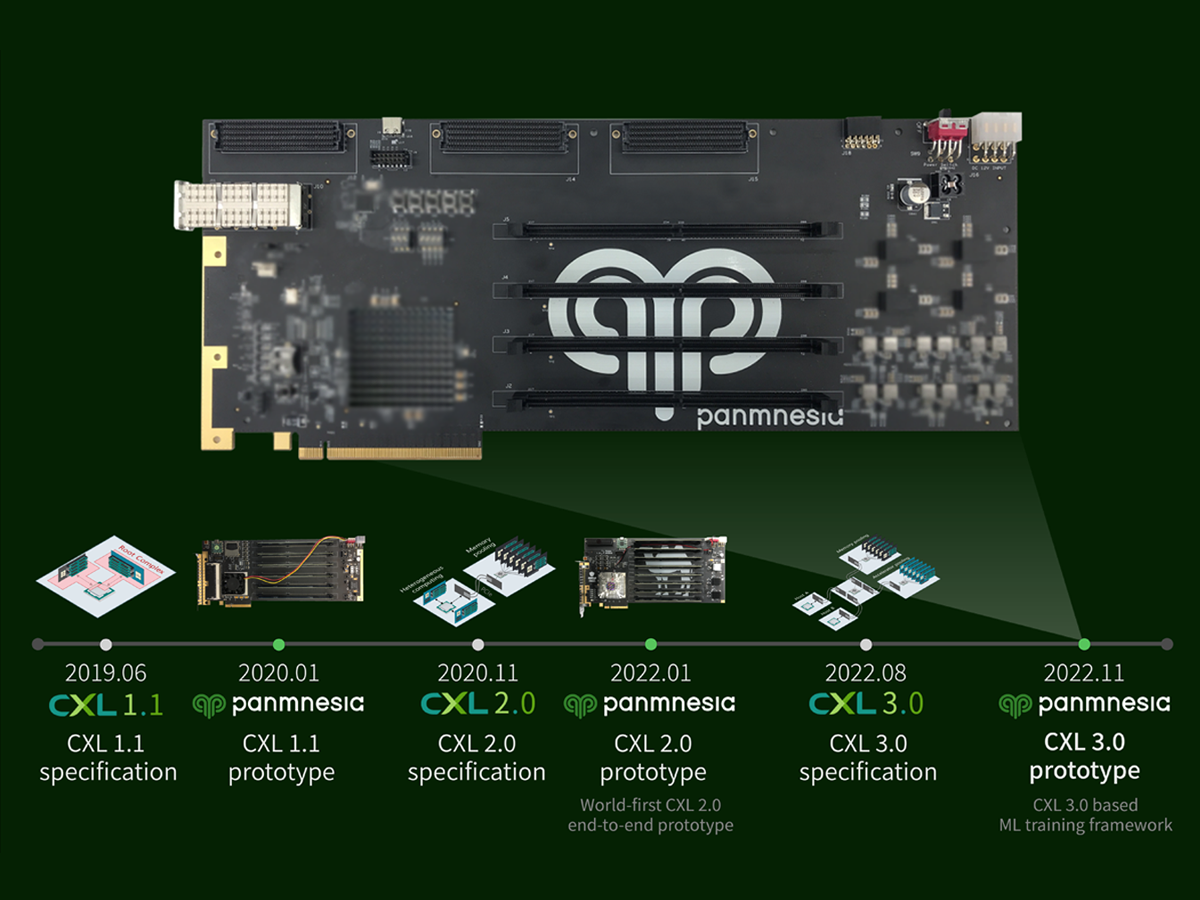

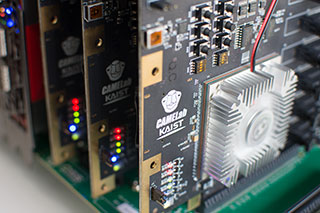

We are thrilled to share our recent participation in the prestigious Computer System Society 2023 conference, where we had the honor of delivering an invited talk entitled “Memory Pooling over CXL”. Our talk was met with great enthusiasm from attendees, as we presented our history of CXL and memory expander development and research from 2015 to 2023, including specific use cases. We also introduced a cutting-edge memory pooling stack and showcased a CXL 3.0 memory expander device reference board that we built from the ground up.

As a leading vendor in CXL and memory technology, we are committed to driving innovation and progress in this field. We are particularly excited about our new memory pooling stack, which we believe has the potential to revolutionize the industry by providing a more efficient and cost-effective way to manage memory resources. Our CXL 3.0 memory expander device reference board is also a testament to our commitment to cutting-edge research and development, as we continue to push the boundaries of what is possible in this field.

In addition to our talk at the Computer System Society 2023 conference, we are also honored to be participating in a closed CXL working symposium later this month, alongside industry leaders such as AMD, Intel, Samsung, and SK-Hynix. We look forward to sharing more technical details about our latest developments and discussing our vision for the future of CXL technology and its impact on data-centric applications.

Overall, our participation in the Computer System Society 2023 conference was a tremendous success, and we are excited to continue to drive innovation and progress in this field. We remain committed to pushing the boundaries of what is possible in CPU and memory technology, and we look forward to sharing more updates and insights in the future.

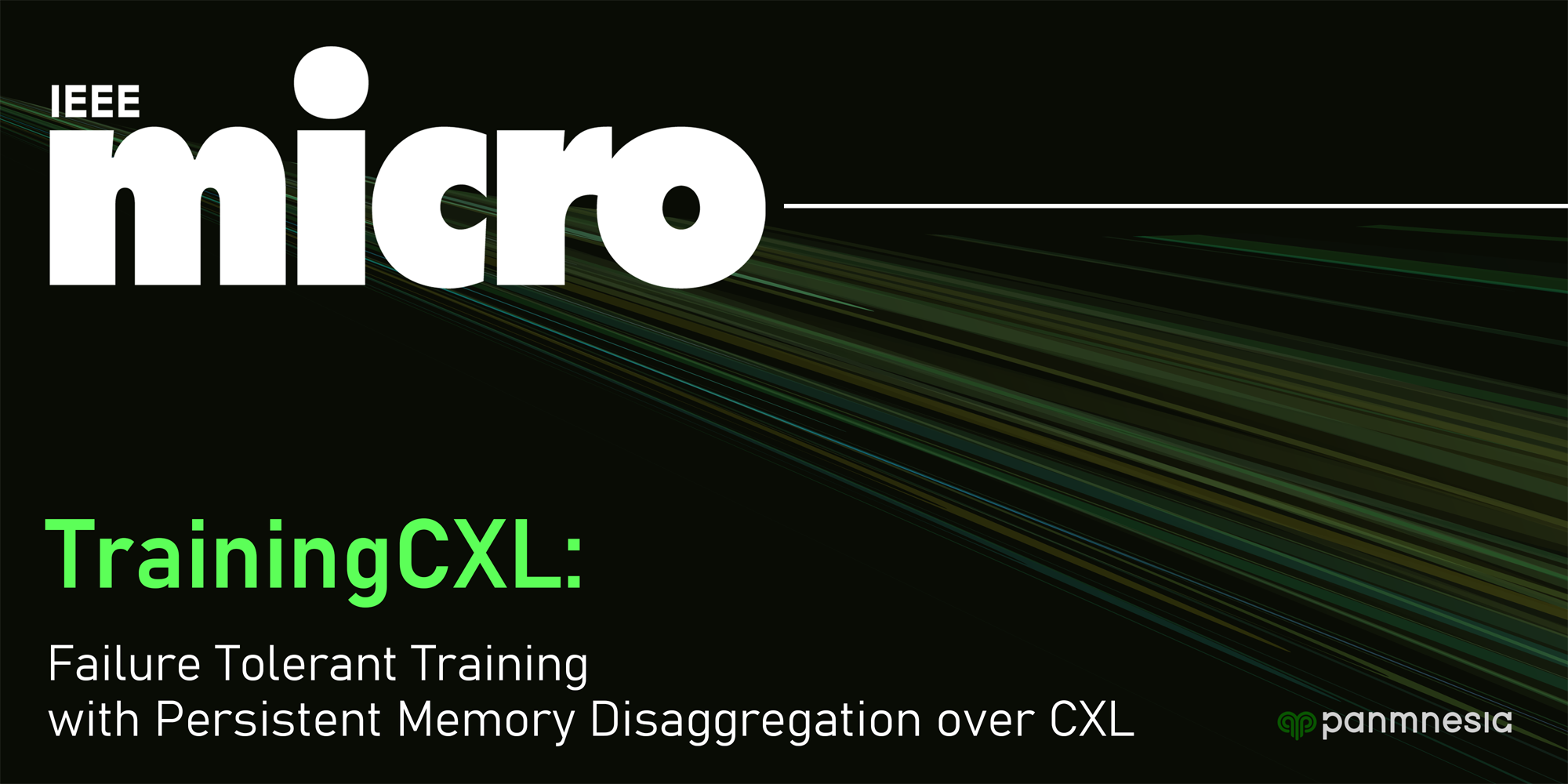

We are thrilled to announce the publication of our latest research paper, which proposes a cutting-edge solution called TrainingCXL for efficiently processing large-scale recommendation datasets in the pool of disaggregated memory. The paper, which will soon be available in IEEE Micro Magazine, introduces several innovative techniques that make training fault-tolerant with low overhead.

TrainingCXL integrates persistent memory (PMEM) and a GPU into a single cache-coherent domain as a CXL Type 2 device, which places PMEM directly in the GPU’s memory hierarchy so that the GPU can access PMEM without software intervention. The solution adds computing and checkpointing logic near the CXL controller to actively manage persistency and training data. Considering PMEM’s vulnerability, TrainingCXL exploits the unique characteristics of recommendation models to take checkpointing overhead off the critical path of training. Lastly, the solution employs an advanced checkpointing technique that relaxes the update order of model parameters and embeddings across training batches.
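To illustrate the general idea of keeping checkpointing off the training critical path, here is a minimal Python sketch. It assumes a toy four-entry embedding table and uses a plain dict as a stand-in for persistent memory; the names `pmem_store`, `train_step`, and `checkpoint_worker` are hypothetical. This is a software analogy only, not TrainingCXL’s mechanism, which places its checkpointing logic in hardware near the CXL controller.

```python
import queue
import threading

# Hypothetical stand-in for persistent memory (PMEM): holds the latest snapshot.
pmem_store = {}


def checkpoint_worker(jobs: queue.Queue) -> None:
    """Persist snapshots in the background so training never waits on the write."""
    while True:
        job = jobs.get()
        if job is None:  # sentinel: training has finished
            break
        batch_id, snapshot = job
        pmem_store["latest"] = (batch_id, snapshot)


def train_step(embeddings: list, batch_id: int) -> None:
    """Toy update: add the batch id to every embedding value."""
    for i in range(len(embeddings)):
        embeddings[i] += batch_id


def train(num_batches: int, checkpoint_every: int) -> list:
    embeddings = [0.0] * 4
    jobs = queue.Queue()
    worker = threading.Thread(target=checkpoint_worker, args=(jobs,))
    worker.start()
    for batch_id in range(1, num_batches + 1):
        train_step(embeddings, batch_id)
        if batch_id % checkpoint_every == 0:
            # Only a cheap in-memory copy happens on the critical path;
            # persisting it is left to the background worker.
            jobs.put((batch_id, list(embeddings)))
    jobs.put(None)
    worker.join()
    return embeddings


final = train(num_batches=10, checkpoint_every=5)
print(final, pmem_store["latest"])
```

After ten batches each value is 1 + 2 + ... + 10 = 55.0, and because a single worker drains the queue in order, the stored snapshot is the one taken at batch 10.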

The evaluation of TrainingCXL shows that it achieves a 5.2x training performance improvement and 76% energy savings compared to modern PMEM-based recommendation systems. These results demonstrate the potential of TrainingCXL to revolutionize large-scale recommendation training by providing a more efficient, cost-effective, and fault-tolerant solution.

We are proud to have developed this innovative solution and excited to share it with the community. This research builds on our commitment to pushing the boundaries of what is possible in CPU and memory technology, and we look forward to continued innovation and progress in this field.

We are excited to announce the publication of our latest research paper in IEEE Micro Magazine, which proposes a groundbreaking solution for memory disaggregation using Compute Express Link (CXL). CXL has recently garnered significant attention in the industry due to its exceptional hardware heterogeneity management and resource disaggregation capabilities. While there is currently no commercially available product or platform integrating CXL into memory pooling, it is widely expected to revolutionize the way memory resources are practically and efficiently disaggregated.

Our paper introduces the concept of directly accessible memory disaggregation, which establishes a direct connection between a host processor complex and remote memory resources over CXL’s memory protocol (CXL.mem). This innovative approach overcomes the limitations of traditional memory disaggregation techniques, such as high overhead and low efficiency. By utilizing CXL.mem, we achieve a highly efficient, low-latency, and high-bandwidth memory disaggregation solution that has the potential to revolutionize the industry.

The evaluation of our solution demonstrates its remarkable performance, as it achieves a significant reduction in memory access latency and a substantial increase in bandwidth compared to traditional disaggregation techniques. These results demonstrate the potential of directly accessible memory disaggregation over CXL as a game-changing solution for future memory systems.

We are proud to have developed this innovative solution and excited to share it with the community. This research builds on our commitment to pushing the boundaries of what is possible in CPU and memory technology, and we look forward to continued innovation and progress in this field.

We were honored to give a keynote address at the Process-in-Memory and AI-Semiconductor Strategic Technology Symposium 2022, hosted by two major ministries in Korea. Our talk, entitled “Why CXL?”, was a deep dive into the unique capabilities and potential of Compute Express Link (CXL) technology, and explored why it is poised to be one of the key technologies in hyper-scale computing.

As a leading vendor in CPU and memory technology, we are committed to driving innovation and progress in this field, and we were thrilled to share our knowledge and experience with the attendees at the symposium. Our keynote address offered several insights into the benefits of CXL, as well as the differences between CXL 1.1, 2.0, and 3.0, providing a comprehensive understanding of this groundbreaking technology.

In contrast to our distinguished lecture at SC’22, which focused on heterogeneous computing over CXL, this keynote homed in on CXL memory expanders and the corresponding infrastructure that most memory/controller vendors are currently missing. We discussed the challenges that vendors face in adopting this technology, and shared our expertise on how to overcome them. Our talk was well-received by attendees, and we were honored to have had the opportunity to share our insights and perspectives on the future of CXL technologies.

As a company, we remain committed to pushing the boundaries of what is possible in CPU and memory technology, and we believe that CXL has the potential to revolutionize the industry. We welcome any inquiries or questions about CXL technology, and we look forward to continued engagement with industry leaders and experts. We are excited to see how this technology will shape the future of hyper-scale computing and data-centric applications, and we are dedicated to driving progress and innovation in this field.

We are thrilled to share our recent experience at SC’22, where we had the opportunity to engage with industry leaders and experts in the field of high-performance computing (HPC) and Compute Express Link (CXL) technology. Our participation in the CXL consortium co-chair meeting, which was led by AMD and Intel, was a highlight of the event. The panel meeting, which included representatives from Microsoft, Intel, and LBNL, was also an excellent opportunity to discuss the challenges and opportunities that HPC needs to address in order to adopt CXL technology.

Our distinguished lecture at SC’22 was a great success: we demonstrated the entire design of the CXL switch and a CXL 3.0 system integrating a true composable architecture. We showcased a new opportunity to connect heterogeneous computing systems and HPC, including accelerators from multiple AI vendors and data processing accelerators, and to integrate them into a single pool. Our presentation also highlighted the capabilities of CXL 3.0 as a rack-scale interconnect technology, including features such as back-invalidation, the cache coherence engine, and fabric architecture with CXL.

We are excited about the potential of CXL technology to revolutionize the industry and provide more efficient and effective solutions for HPC. We hope to share more about our vision and CXL 3.0 prototypes at future events and venues. As a company, we remain committed to driving innovation and progress in CPU and memory technology, and we look forward to continued engagement with industry leaders and experts in this field.

Compute Express Link (CXL) technology has been generating significant attention in recent years, thanks to its exceptional hardware heterogeneity management and resource disaggregation capabilities. While there is currently no commercially available product or platform integrating CXL 2.0/3.0 into memory pooling, it is expected to revolutionize the way memory resources are practically and efficiently disaggregated.

In our upcoming distinguished lecture, we will delve into why existing computing and memory resources require a new interface for cache coherence and explain how CXL can put different types of resources into a disaggregated pool. We will showcase two real system examples, including a CXL 2.0-based end-to-end system that directly connects a host processor complex and remote memory resources over CXL’s memory protocol, and a CXL-integrated storage expansion system prototype.

In addition to these real-world examples, we will also introduce a set of hardware prototypes designed to support the future CXL system (CXL 3.0) as part of our ongoing project. We are excited to share our expertise and insights with attendees, and we hope to inspire others to push the boundaries of what is possible in CPU and memory technology.

Our distinguished lecture will offer a comprehensive understanding of the capabilities and potential of CXL technology, and we are excited to share our vision with the community. We are committed to driving innovation and progress in this field and believe that CXL has the potential to revolutionize the industry. We encourage attendees to join us at the event in Dallas, Texas, this November, and we look forward to engaging with industry leaders and experts in the field. The program for the event will be available soon, so stay tuned for more details!

We are excited to announce that our team has been invited to demonstrate two hot topics related to CXL memory disaggregation and storage pooling at the CXL Forum 2022, one of the hottest sessions at Flash Memory Summit. This session, led by the CXL Consortium and MemVerge, brings together industry leaders and experts to discuss the latest updates and advancements in CXL technology. We will be joining other leading companies and institutions, including ARM, Lenovo, Kioxia, and the University of Michigan TPP team, among others.

Our sessions will take place on August 2nd at 4:40 PM PT, and we are eager to share our insights and expertise with attendees. In the first session, entitled “CXL-SSD: Expanding PCIe Storage as Working Memory over CXL,” we will argue that CXL is a cost-effective and practical interconnect technology that can bridge PCIe storage’s block semantics to memory-compatible byte semantics. We will discuss the mechanisms that make PCIe storage impractical for use as a memory expander and explore all the CXL device types and their protocol interfaces to determine the best configuration for expanding the host’s CPU memory.

In the second session, we will demonstrate our CXL 2.0 end-to-end system, which includes the CXL network and memory expanders. This system showcases our expertise in CXL technology and provides attendees with a firsthand look at the potential of this groundbreaking technology.

We are honored to have the opportunity to participate in the CXL Forum 2022 and to share our insights and expertise with industry leaders and experts. We encourage attendees to check out the detailed information on the session and how to register via Zoom through the link provided. We look forward to engaging with the community and pushing the boundaries of what is possible in CPU and memory technology.

The Compute Express Link (CXL) protocol has been making waves in the computing industry, with various organizations exploring its potential to create tiered, disaggregated, and composable main memory for systems. While hyperscalers and cloud builders have been the early adopters of this technology, high-performance computing (HPC) centers are also starting to show interest.

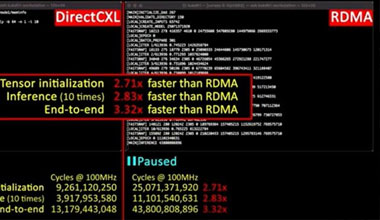

The Korea Advanced Institute of Science and Technology (KAIST) has been working on a promising solution called DirectCXL, which enables memory disaggregation and composition using the CXL 2.0 protocol atop the PCI-Express bus and a PCI-Express switching complex. Recently, DirectCXL was showcased in a paper presented at the USENIX Annual Technical Conference and discussed in a brochure and a short video.

This solution promises to revolutionize HPC by offering a practical and cost-effective approach to memory expansion. Like Meta Platforms’ Transparent Page Placement protocol and Chameleon memory tracking, and Microsoft’s zNUMA memory project, DirectCXL aims to create tiered, disaggregated, and composable main memory for systems.

We are pleased to announce that Panmnesia, Inc has acquired the intellectual property rights for DirectCXL from KAIST. As a company, we are excited to see the progress being made in the field of memory disaggregation and composition, particularly in the HPC space. We believe that CXL has the potential to drive innovation and progress in CPU and memory technology, and we are committed to leveraging this technology to create groundbreaking solutions for our customers.

Stay tuned for more updates and developments as we continue to explore the potential of CXL and DirectCXL.

We are proud to announce that our cutting-edge Compute Express Link (CXL) based solid-state drives (CXL-SSD) have been successfully demonstrated at the 2022 ACM Workshop on Hot Topics in Storage and File Systems (HotStorage’22). The workshop, which focuses on research and developments in storage systems, was a perfect opportunity to showcase the innovative capabilities of our CXL-SSD.

CXL is an open multi-protocol method that supports cache coherent interconnect for various processors, accelerators, and memory device types. While it primarily manages data coherency between CPU memory spaces and memory on attached devices, we believe that it can also be useful in transforming existing block storage into cost-efficient, large-scale working memory.

Our presentation at HotStorage’22 examined the three sub-protocols of CXL from a memory expander viewpoint. We identified the device type that is the best option for PCIe storage to bridge its block semantics to memory-compatible byte semantics. Additionally, we discussed how to integrate a storage-integrated memory expander into an existing system and speculated on its impact on system performance. Lastly, we explored various CXL network topologies and a new opportunity to efficiently manage storage-integrated, CXL-based memory expansion.
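To make “bridging block semantics to byte semantics” concrete, here is a minimal Python sketch of the translation an SSD-backed memory expander has to perform: byte-granular loads and stores are served from a cache of whole blocks, and dirty blocks are written back later. It assumes a 4 KiB block size and a simple write-back cache; `BlockDevice` and `ByteAddressableView` are hypothetical names, and the sketch models only the semantic gap, not the CXL protocol or our CXL-SSD design.

```python
BLOCK_SIZE = 4096  # assumed block size in bytes


class BlockDevice:
    """Hypothetical block storage: can only read or write whole blocks."""

    def __init__(self, num_blocks: int) -> None:
        self.blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]
        self.block_reads = 0

    def read_block(self, lba: int) -> bytes:
        self.block_reads += 1
        return self.blocks[lba]

    def write_block(self, lba: int, data: bytes) -> None:
        assert len(data) == BLOCK_SIZE
        self.blocks[lba] = bytes(data)


class ByteAddressableView:
    """Exposes byte-granular load/store over a block device via a write-back block cache."""

    def __init__(self, dev: BlockDevice) -> None:
        self.dev = dev
        self.cache = {}     # lba -> bytearray holding the cached block
        self.dirty = set()  # lbas modified since the last flush

    def _block(self, lba: int) -> bytearray:
        if lba not in self.cache:  # miss: fetch the whole block once
            self.cache[lba] = bytearray(self.dev.read_block(lba))
        return self.cache[lba]

    def load(self, addr: int, size: int) -> bytes:
        out = bytearray()
        while size > 0:  # a load may span several blocks
            lba, off = divmod(addr, BLOCK_SIZE)
            n = min(size, BLOCK_SIZE - off)
            out += self._block(lba)[off:off + n]
            addr, size = addr + n, size - n
        return bytes(out)

    def store(self, addr: int, data: bytes) -> None:
        pos = 0
        while pos < len(data):
            lba, off = divmod(addr + pos, BLOCK_SIZE)
            n = min(len(data) - pos, BLOCK_SIZE - off)
            # Read-modify-write happens on the cached block, not the device.
            self._block(lba)[off:off + n] = data[pos:pos + n]
            self.dirty.add(lba)
            pos += n

    def flush(self) -> None:
        for lba in sorted(self.dirty):
            self.dev.write_block(lba, bytes(self.cache[lba]))
        self.dirty.clear()


dev = BlockDevice(num_blocks=4)
mem = ByteAddressableView(dev)
mem.store(4094, b"CXL!")   # a 4-byte store that straddles blocks 0 and 1
print(mem.load(4094, 4))   # b'CXL!'
mem.flush()
print(dev.block_reads)     # 2: each touched block was fetched only once
```

The cache is what makes the bridge workable: without it, every small store would force a full block read and write on the storage side.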

Our demonstration at HotStorage’22 highlighted significant advancements in CXL technology and showed how it can transform storage and memory systems. CXL-SSD enables large-scale working memory to be built cost-effectively by leveraging existing block storage, and we believe this breakthrough will revolutionize storage and memory systems, particularly in high-performance computing.

Panmnesia, Inc is committed to developing innovative solutions that leverage the potential of CXL technology. Our CXL-SSD is just one example of the groundbreaking solutions that we are creating to meet the needs of our customers. We believe that CXL is the future of CPU and memory technology, and we are excited to be at the forefront of this exciting new frontier.

Stay tuned for more updates as we continue to explore the potential of CXL technology and its impact on storage and memory systems.

We are thrilled to share that our team has successfully proposed and demonstrated DirectCXL, a new cache coherent interconnect solution that directly connects a host processor complex and remote memory resources over CXL’s memory protocol (CXL.mem). In a paper published at USENIX ATC, we explore several practical designs for CXL-based memory disaggregation and make them real, offering a new approach to utilizing memory resources that we expect to be more efficient than existing approaches.

DirectCXL eliminates data copies between host memory and remote memory, which exposes the true performance of remote-side disaggregated memory resources to users. Additionally, because no operating system currently supports CXL, we created a CXL software runtime that lets users access the underlying disaggregated memory resources through plain load/store instructions.
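As a rough analogy for what “plain load/store access” means from the application’s side: once a runtime maps a region into the process address space, the program reads and writes it like ordinary memory, with no explicit copy or I/O call. The sketch below uses Python’s standard `mmap` module with an anonymous mapping as a stand-in for a CXL-backed region. It does not talk to any CXL device, and `map_region` is a hypothetical helper, not DirectCXL’s runtime API.

```python
import mmap


def map_region(size: int) -> mmap.mmap:
    """Hypothetical helper: an anonymous mapping stands in for a
    disaggregated-memory region that a CXL runtime might expose."""
    return mmap.mmap(-1, size)


region = map_region(4096)
raw = memoryview(region)
words = raw.cast("Q")  # view the region as 64-bit words

# "Store": write directly into the mapped region, with no copy or send call.
for i in range(8):
    words[i] = i * i

# "Load": read the values back the same way.
total = sum(words[i] for i in range(8))
print(total)  # 140

# Release the buffer views before closing the mapping.
words.release()
raw.release()
region.close()
```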

Our paper was accepted at USENIX ATC, which had an acceptance rate of only 16%, a testament to the significance of our proposal and its potential impact on the industry. We are excited to continue our research and development in this area and look forward to sharing more updates in the near future.

News (Korean):

As the big data era arrives and data-intensive workloads continue to proliferate, resource disaggregation has become an increasingly important area of research and development. By separating processors and storage devices from one another, disaggregated resource architectures can offer greater scale-out capabilities, increased cost efficiency, and transparent elasticity, breaking down the physical boundaries that have traditionally constrained data center and high-performance computing environments.

However, achieving memory disaggregation that supports high performance and scalability with low cost is a non-trivial task. Many industry prototypes and academic studies have explored a wide range of approaches to realize memory disaggregation, but to date, the concept has yet to be fully realized due to several fundamental challenges.

That’s where our team at Panmnesia (KAIST startup) comes in. We have developed the world’s first proof-of-concept CXL solution that directly connects a host processor complex and remote memory resources over the Compute Express Link (CXL) protocol. Our solution framework includes a set of CXL hardware and software IPs, such as a CXL switch, a processor complex IP, and a CXL memory controller, which completely decouple memory resources from computing resources and enable high-performance, fully scale-out memory disaggregation architectures.

Our CXL solution framework provides several key benefits over traditional memory disaggregation approaches. For example, it enables greater resource utilization and efficiency by making it possible to more easily share and allocate memory resources across multiple nodes. Additionally, our framework can support greater scalability, allowing organizations to expand their memory resources as their needs grow without incurring significant costs or disruptions.

Overall, we believe that our CXL solution represents a major breakthrough in the field of resource disaggregation and memory disaggregation in particular. By providing a more efficient, cost-effective, and scalable approach to memory disaggregation, we are helping organizations to fully leverage the power of their data-intensive workloads and realize the full potential of their computing environments.

News (Korean):