Panmnesia Introduces Today’s and Tomorrow’s AI Infrastructure, Including a Supercluster Architecture That Integrates NVLink, UALink, and HBM via CXL


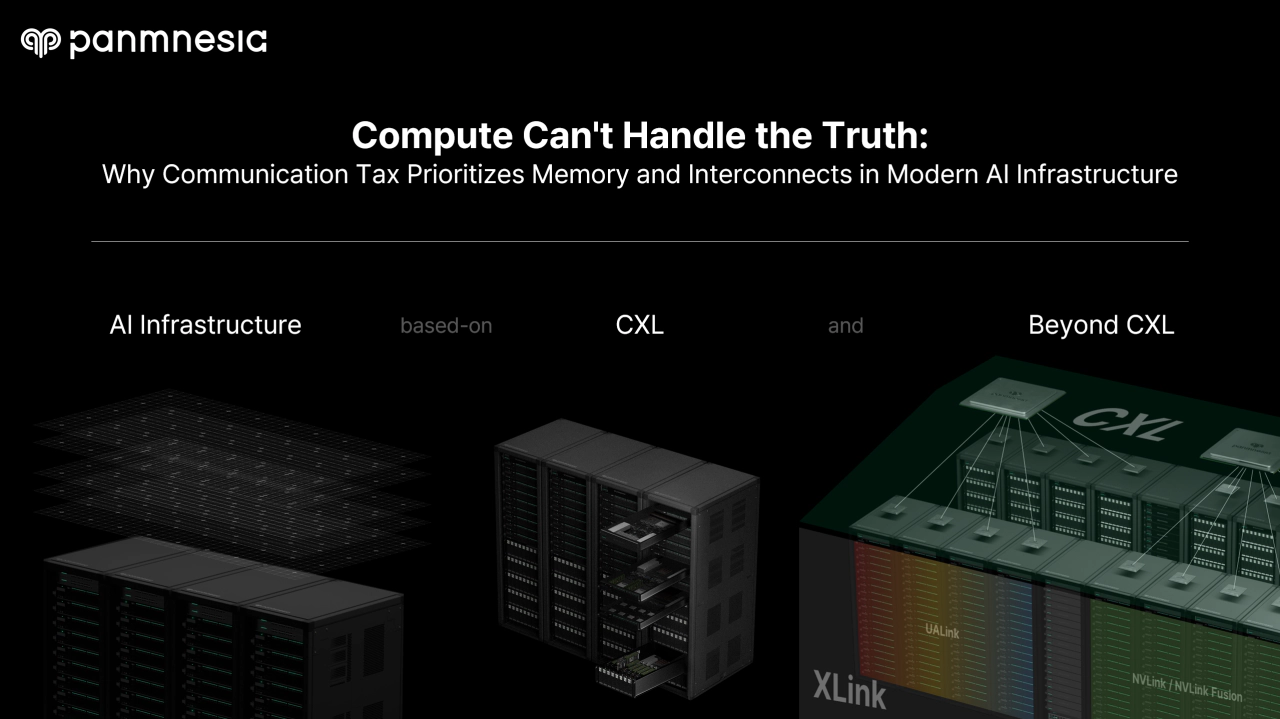

Panmnesia has released a technical report titled “Compute Can’t Handle the Truth: Why Communication Tax Prioritizes Memory and Interconnects in Modern AI Infrastructure.” In this report, Panmnesia outlines the trends in modern AI models, the limitations of current AI infrastructure in handling them, and how emerging memory and interconnect technologies—including Compute Express Link (CXL), NVLink, Ultra Accelerator Link (UALink), and High Bandwidth Memory (HBM)—can be leveraged to improve AI infrastructure.

Panmnesia aims to address current challenges in AI infrastructure by building flexible, scalable, and communication-efficient architectures from diverse interconnect technologies, rather than relying on fixed GPU-based configurations.

Panmnesia’s CEO, Dr. Myoungsoo Jung, explained, “This technical report was written to more clearly and accessibly share the ideas on AI infrastructure that we presented during a keynote last August. We aimed to explain AI and large language models (LLMs) in a way that even readers without deep technical backgrounds could understand. We also explored how AI infrastructure may evolve in the future, considering the unique characteristics of AI services.” He added, “We hope this report proves helpful to those interested in the field.”

Overview of the Technical Report

Panmnesia’s technical report is divided into three main parts:

- Trends in AI and Modern Data Center Architectures for AI Workloads

- CXL Composable Architectures: Improving Data Center Architecture Using CXL and Acceleration Case Studies

- Beyond CXL: Optimizing AI Resource Connectivity in Data Center via Hybrid Link Architectures (CXL-over-XLink Supercluster)

Part 1. Trends in AI and Modern Data Center Architectures for AI Workloads

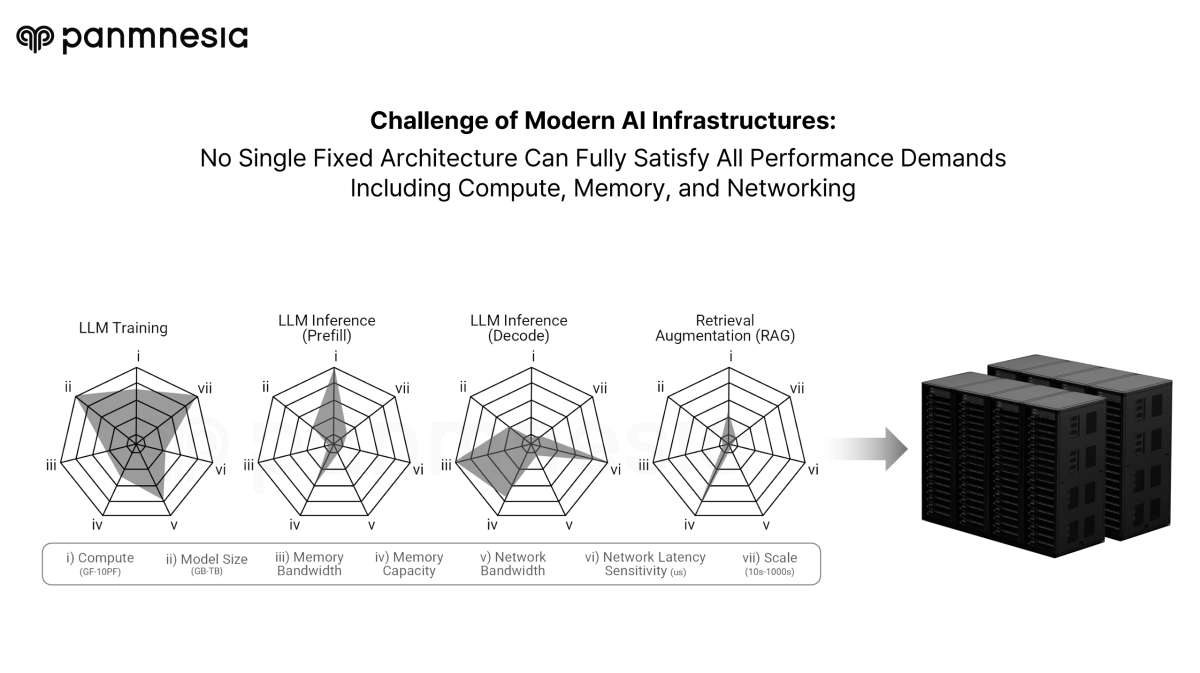

AI applications based on sequence models, such as chatbots, image generation, and video processing, are now widely integrated into everyday life. The technical report begins with an overview of sequence models, their underlying mechanisms, and the evolution from recurrent neural networks (RNNs) to LLMs. It then explains how current AI infrastructures handle these models and discusses their limitations. In particular, Panmnesia identifies two major challenges in modern AI infrastructures: (1) communication overhead during synchronization and (2) low resource utilization resulting from rigid, GPU-centric architectures.

Part 2. CXL Composable Architectures: Improving Data Center Architecture Using CXL and Acceleration Case Studies

To address these challenges, Panmnesia proposes a solution built on CXL, an emerging interconnect technology. The report offers a thorough explanation of CXL's core concepts and features, emphasizing how CXL minimizes unnecessary communication through automatic cache coherence management and enables flexible resource expansion, thereby addressing the key challenges of conventional AI infrastructure.

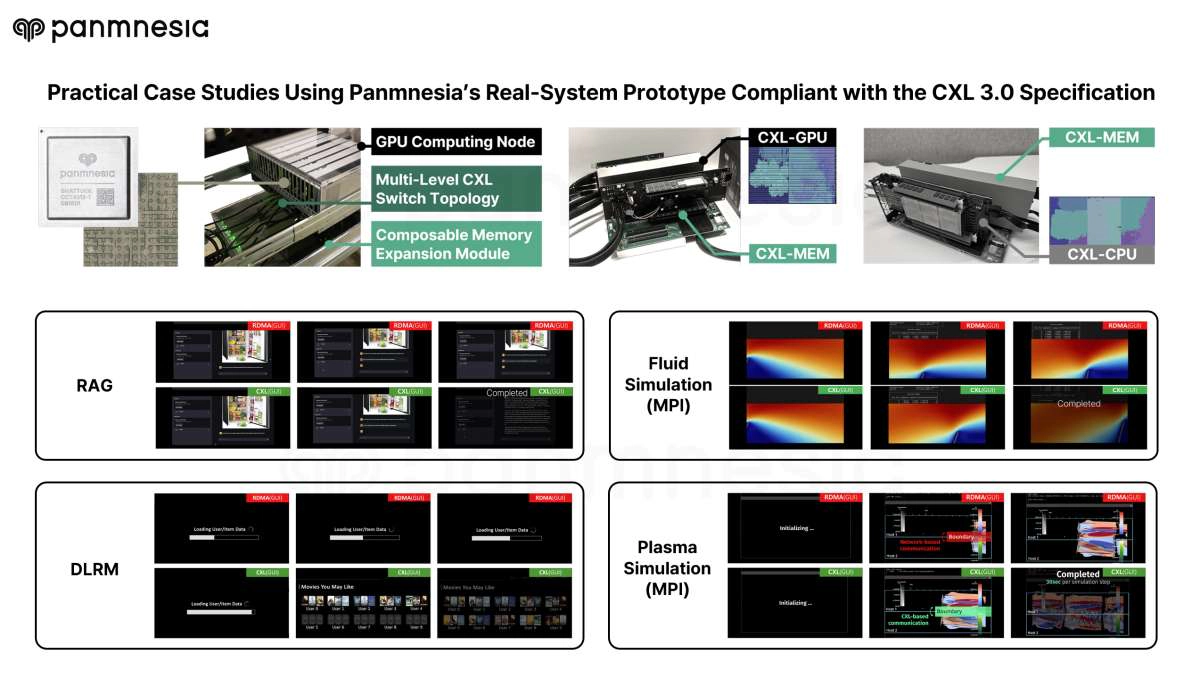

Panmnesia also introduces its CXL 3.0-compliant real-system prototype, built with the company's core technologies, including its CXL IPs and CXL switches. The report then shows how this prototype has been applied to accelerate real-world AI applications, such as retrieval-augmented generation (RAG) and deep learning recommendation models (DLRM), demonstrating the practicality and effectiveness of CXL-based infrastructure.

Part 3. Beyond CXL: Optimizing AI Resource Connectivity in Data Center via Hybrid Link Architectures (CXL-over-XLink Supercluster)

The technical report does not stop at CXL. Panmnesia goes further by proposing ways to build more advanced AI infrastructure by integrating diverse interconnect technologies alongside CXL.

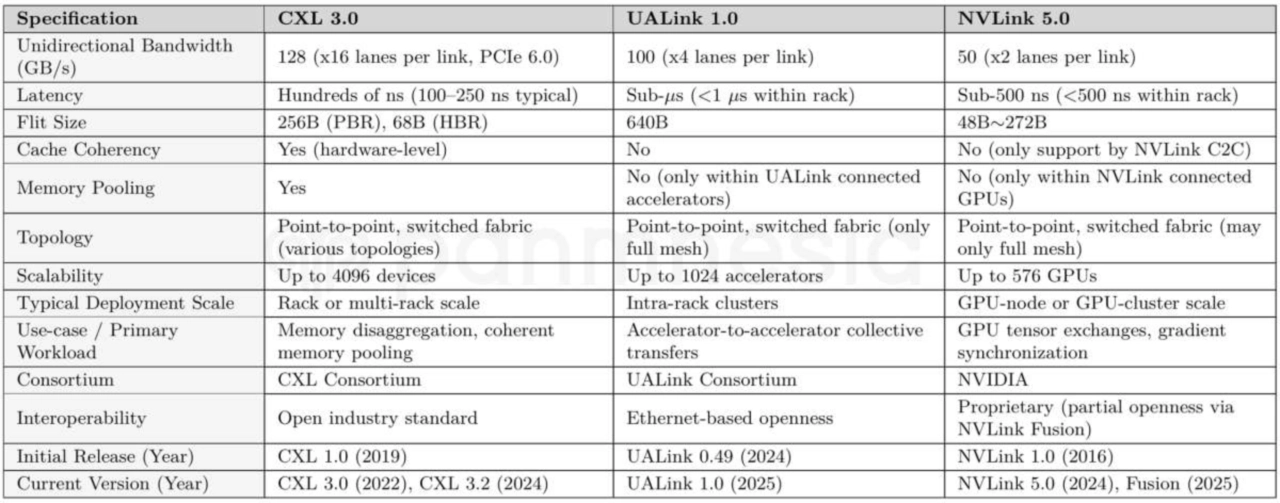

At the core of this approach is the CXL-over-XLink supercluster architecture. Here, "XLink" collectively refers to accelerator-centric interconnects such as UALink, NVLink, and NVLink Fusion; the architecture uses CXL to improve scalability, compatibility, and communication efficiency across clusters connected by these links. The report explains how integrating these interconnect technologies yields an architecture that combines the advantages of each. It concludes with a discussion of the practical application of emerging technologies such as HBM and silicon photonics.

Conclusion

With the release of this technical report, Panmnesia reinforces its leadership in next-generation interconnect technologies such as CXL and UALink. In parallel, the company continues to actively participate in various consortia related to AI infrastructure, including the CXL Consortium, UALink Consortium, PCI-SIG, and the Open Compute Project. Recently, Panmnesia also unveiled its “link solution” product lineup, designed to realize its vision for next-generation AI infrastructure and further strengthen its brand identity. Dr. Myoungsoo Jung, CEO of Panmnesia, stated, “We will continue to lead efforts to build better AI infrastructure by developing diverse link solutions and sharing our insights openly.”

The full technical report on AI infrastructure is available on the 'Publication' page of Panmnesia's website.