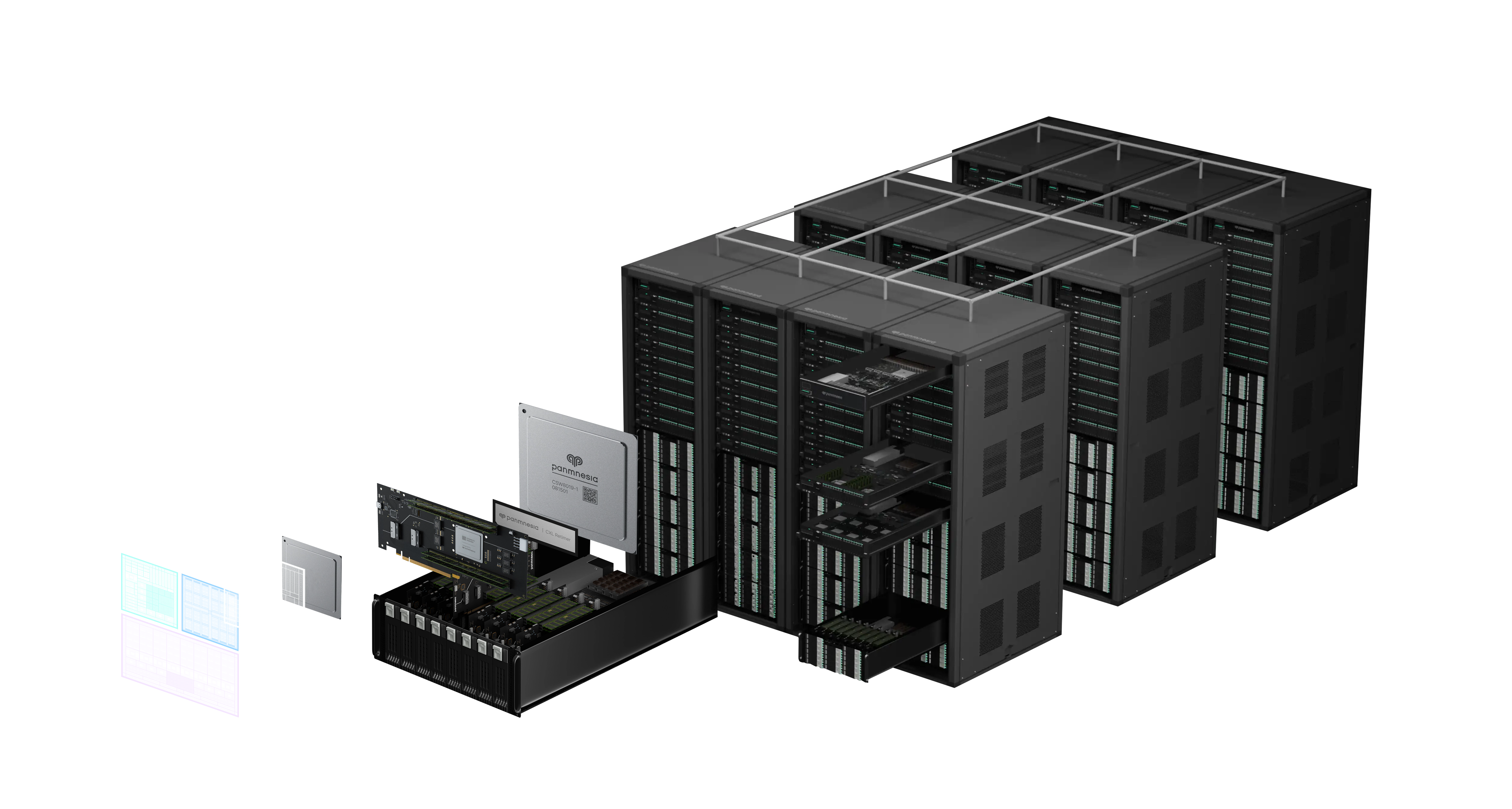

World's First CXL 3.2/PCIe 6.4 Switch for AI Infrastructure

PanSwitch is a next-generation CXL 3.2/PCIe 6.4 switch built to interconnect thousands of memory, compute, and accelerator devices into a unified, composable fabric. With a high fan-out architecture, sub-100 ns latency, and full CXL protocol support, PanSwitch enables seamless resource sharing and interoperability across disaggregated infrastructure, making it ideal for large-scale AI, HPC, and cloud deployments.

Key Benefits

World's Highest Fan-Out (256 Lanes)

Easily scale your system with a 256-lane switch. This high fan-out lets the system connect numerous GPUs, xPUs, CPUs, and memory devices while still allocating enough bandwidth to each device.
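As a rough illustration of the lane budget behind this fan-out, the sketch below checks a hypothetical port plan against the 256 lanes and the x4/x8/x16 bifurcation widths listed in the specification table. `PortRequest` and `plan_ports` are made-up names for illustration, not part of any PanSwitch tool, and the check deliberately ignores any lane-grouping or alignment rules the real silicon may impose.

```python
from dataclasses import dataclass

TOTAL_LANES = 256            # lane count from the specification table
ALLOWED_WIDTHS = {4, 8, 16}  # bifurcation widths from the specification table


@dataclass
class PortRequest:
    """Hypothetical request: one device attached at a given link width."""
    name: str
    width: int


def plan_ports(requests):
    """Return (lanes_used, lanes_free) for a hypothetical port plan.

    Raises ValueError if a width is not a supported bifurcation or the
    plan needs more lanes than the switch has. Lane alignment and port
    grouping constraints are deliberately not modeled.
    """
    used = 0
    for req in requests:
        if req.width not in ALLOWED_WIDTHS:
            raise ValueError(f"{req.name}: x{req.width} is not a supported width")
        used += req.width
    if used > TOTAL_LANES:
        raise ValueError(f"plan needs {used} lanes, switch has {TOTAL_LANES}")
    return used, TOTAL_LANES - used


if __name__ == "__main__":
    # 8 accelerators at x16, 12 memory expanders at x8, 8 NICs at x4.
    plan = ([PortRequest(f"gpu{i}", 16) for i in range(8)]
            + [PortRequest(f"mem{i}", 8) for i in range(12)]
            + [PortRequest(f"nic{i}", 4) for i in range(8)])
    print(plan_ports(plan))  # (256, 0)
```

With every port at x4, 256 lanes give at most 64 ports; with every port at x16, at most 16. Mixing widths, as in the example, trades port count against per-device bandwidth.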

World's First CXL Fabric Support

An intelligent network reconfiguration system supports multiple topology patterns, real-time load balancing, and automated resource optimization for complex data center environments.
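To illustrate why non-tree topologies matter for load balancing, the sketch below counts equal-length shortest paths between two endpoints with a breadth-first search. The two topologies (a single-root tree and a two-spine layout) and all node names are hypothetical, and this is not PanSwitch's balancing algorithm. It only shows that a tree offers exactly one route between two endpoints, while a non-tree fabric can offer several equal-hop routes across which traffic could be spread.

```python
from collections import deque


def shortest_path_count(graph, src, dst):
    """Return (hops, number_of_shortest_paths) from src to dst, or None.

    Breadth-first search visits nodes in order of hop distance, so by the
    time a node is dequeued its shortest-path count is final.
    """
    dist = {src: 0}
    ways = {src: 1}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nxt in graph[node]:
            if nxt not in dist:                # first time reached
                dist[nxt] = dist[node] + 1
                ways[nxt] = ways[node]
                queue.append(nxt)
            elif dist[nxt] == dist[node] + 1:  # another equally short route
                ways[nxt] += ways[node]
    if dst not in dist:
        return None
    return dist[dst], ways[dst]


# Hypothetical fabrics: GPU on leaf switch A, memory on leaf switch B.
TREE = {
    "gpu": ["leafA"], "leafA": ["gpu", "root"],
    "root": ["leafA", "leafB"],
    "leafB": ["root", "mem"], "mem": ["leafB"],
}
TWO_SPINE = {
    "gpu": ["leafA"], "leafA": ["gpu", "spine0", "spine1"],
    "spine0": ["leafA", "leafB"], "spine1": ["leafA", "leafB"],
    "leafB": ["spine0", "spine1", "mem"], "mem": ["leafB"],
}

if __name__ == "__main__":
    print(shortest_path_count(TREE, "gpu", "mem"))       # (4, 1)
    print(shortest_path_count(TWO_SPINE, "gpu", "mem"))  # (4, 2)
```

In the two-spine layout, a balancer could split GPU-to-memory traffic across both spines without lengthening either route; in the tree, the root switch carries all of it.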

Double-Digit Nanosecond Latency

Built on our latency-optimized CXL IPs, our CXL switch achieves double-digit nanosecond latency, enabling memory expansion with minimal performance overhead and lowering AI infrastructure costs.
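A simple way to reason about what the switch adds to a memory access is the back-of-the-envelope model below. It assumes the quoted latency applies per switch traversal and that both the request and the response cross every switch on the path, and it ignores link flight time, retimers, and queuing. The symbols $t_{\text{mem}}$, $t_{\text{sw}}$, and $h$ are introduced here for illustration and are not PanSwitch measurements.

$$
t_{\text{access}} \approx t_{\text{mem}} + 2\,h\,t_{\text{sw}}
$$

Here $t_{\text{mem}}$ is the access latency of the memory device itself, $t_{\text{sw}}$ is the per-traversal switch latency, and $h$ is the number of switch levels between host and memory. Under these assumptions, a single switch ($h = 1$) adds $2\,t_{\text{sw}}$, which stays under 200 ns when $t_{\text{sw}} < 100$ ns, and each additional cascaded level adds another $2\,t_{\text{sw}}$. This is why per-hop switch latency matters more as fabrics grow deeper.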

Key Features

Our product in more detail

| Feature | PanSwitch |
|---|---|
| Specification | CXL 3.2 (backward compatible with previous versions of CXL and PCIe) |
| Number of lanes | 256 |
| Data rate | 64 GT/s |
| Bifurcation support | x4, x8, x16 |
| Supported subprotocols | CXL.io, CXL.mem, CXL.cache; Unordered IO support; back-invalidation support; direct P2P support |
| Supported device types | CXL Type 1, Type 2, and Type 3 devices |
| CXL fabric features | Switch cascading (multi-level switching); tree and non-tree topologies |
| Supported flit formats | 68B; 256B (standard and latency-optimized, LOpt) |
| RAS features | Hot-plug support; data poisoning support; viral support |
| Power management | Low-power state support |
| Peripherals | UART, I2C, GPIO |
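The lane count and data rate in the table imply a raw per-direction bandwidth, worked out below. These are raw signaling figures, assuming one bit per transfer per lane (as with PCIe 6.x's 64 GT/s signaling); flit framing, CRC, and FEC overhead make the delivered bandwidth somewhat lower.

$$
B = \frac{N \cdot r}{8}, \qquad
B_{\times 16} = \frac{16 \times 64\ \text{Gb/s}}{8} = 128\ \text{GB/s}, \qquad
B_{256} = \frac{256 \times 64\ \text{Gb/s}}{8} = 2048\ \text{GB/s} \approx 2\ \text{TB/s}
$$

Here $N$ is the number of lanes and $r$ is the per-lane rate in Gb/s; each figure is per direction.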

All specifications are subject to change without notice. Performance may vary based on system configuration and usage patterns.

Fabric Manager

PanSwitch comes with a fabric manager that fully supports the CXL 3.2 Fabric Manager (FM) API. Using the fabric manager, users can configure, manage, and monitor the switch, which simplifies deployment.

Device identification

Fabric discovery

Resource allocation

QoS telemetry support

Connectivity management

Security management

Device health management
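In CXL, a core part of fabric-manager resource allocation is binding the downstream virtual PCI-to-PCI bridges (vPPBs) of each host's virtual CXL switch (VCS) to physical switch ports, which the FM API exposes through its Bind vPPB and Unbind vPPB commands. The sketch below models only that bookkeeping in memory, for example moving a memory device from one host to another. The `Fabric` class and its methods are hypothetical; they send no FM API commands and do not model multi-logical-device (MLD) ports.

```python
class Fabric:
    """Hypothetical in-memory model of vPPB-to-physical-port bindings."""

    def __init__(self, downstream_ports):
        self.free_ports = set(downstream_ports)
        # (vcs_id, vppb_index) -> physical downstream port
        self.bindings = {}

    def bind(self, vcs_id, vppb, port):
        """Attach a free physical port to a vPPB of the given VCS."""
        if port not in self.free_ports:
            raise ValueError(f"port {port} is not free")
        if (vcs_id, vppb) in self.bindings:
            raise ValueError(f"vPPB {vppb} of VCS {vcs_id} is already bound")
        self.free_ports.remove(port)
        self.bindings[(vcs_id, vppb)] = port

    def unbind(self, vcs_id, vppb):
        """Detach a vPPB and return the port it was bound to."""
        port = self.bindings.pop((vcs_id, vppb))  # KeyError if not bound
        self.free_ports.add(port)
        return port

    def ports_of(self, vcs_id):
        """Physical ports currently visible to the host behind vcs_id."""
        return sorted(p for (v, _), p in self.bindings.items() if v == vcs_id)


if __name__ == "__main__":
    fabric = Fabric(range(8))
    fabric.bind(vcs_id=0, vppb=1, port=5)  # memory device on port 5 -> host 0
    print(fabric.ports_of(0))              # [5]
    fabric.unbind(vcs_id=0, vppb=1)        # release it from host 0
    fabric.bind(vcs_id=1, vppb=1, port=5)  # hand it to host 1
    print(fabric.ports_of(0), fabric.ports_of(1))  # [] [5]
```

A real fabric manager would also need to coordinate such a move with the affected hosts, which this sketch leaves out.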

Real-world Use Cases

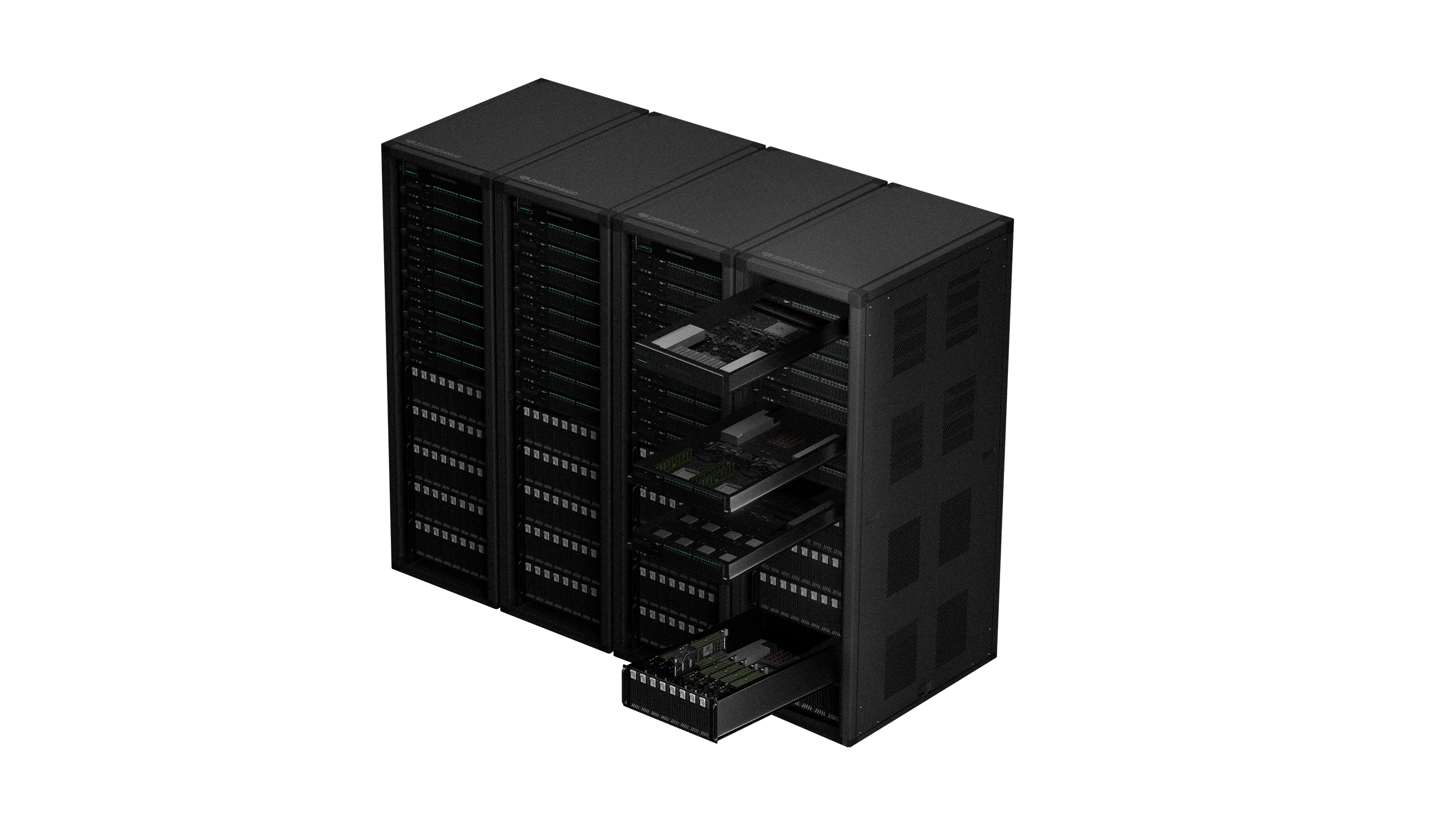

AI Training Infrastructure

Accelerate machine learning workloads with high-bandwidth memory expansion and GPU interconnects.

Ideal for large-scale AI training environments requiring massive memory capacity and ultra-low-latency communication between accelerators.

Data Center Expansion

Scale your data center infrastructure with flexible CXL device connectivity and memory disaggregation.

Enables dynamic resource allocation and improves overall system efficiency in enterprise environments with growing computational demands.

HPC Cluster Computing

Connect thousands of compute nodes with ultra-low latency for high-performance computing workloads.

Ideal for scientific computing, simulation, and research applications requiring massive parallel processing and fast inter-node communication.