Optimal Network Layout for AI Workloads, Co-designed with Link Technology

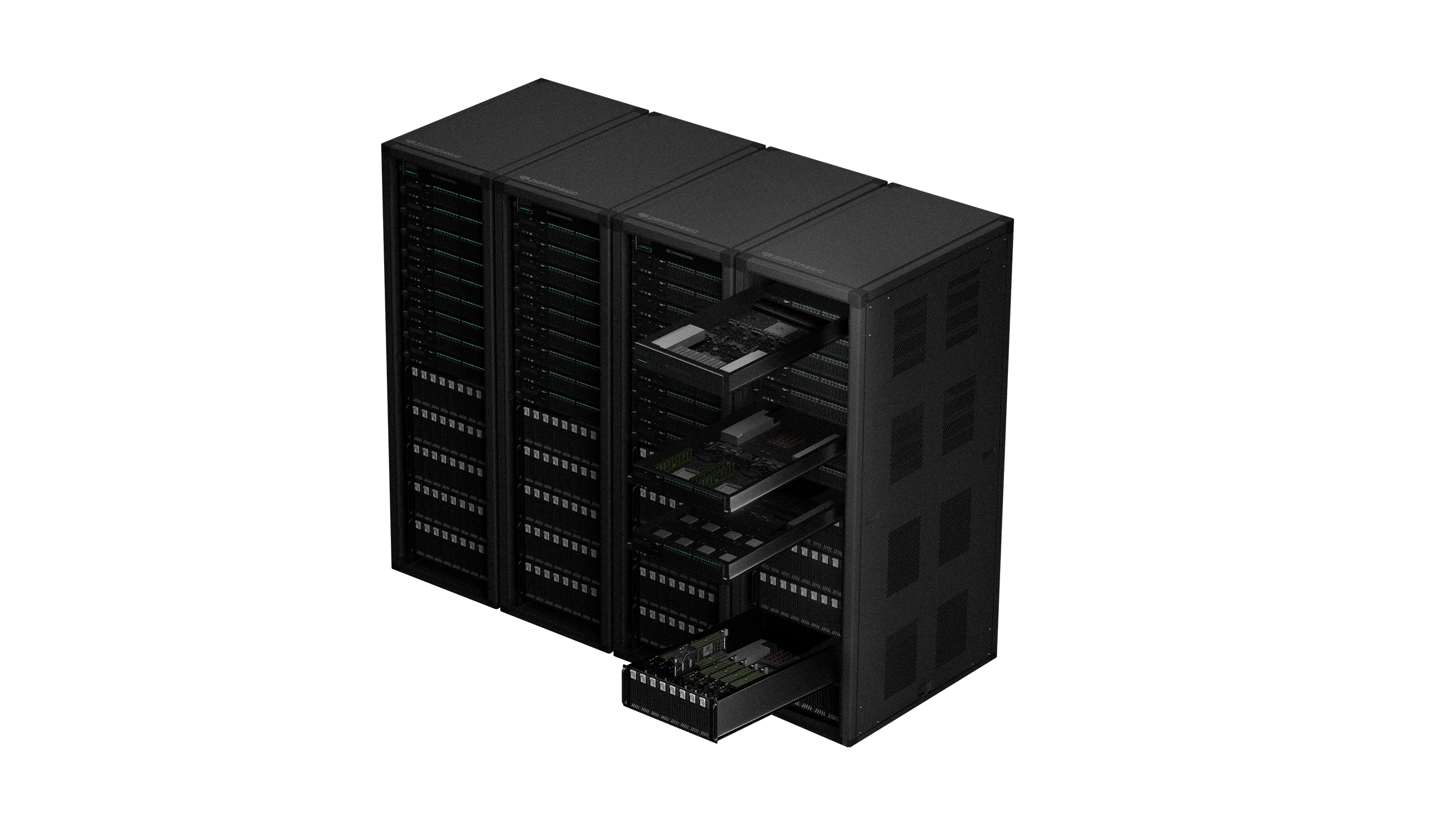

PanFabric is a next-generation network design and management solution co-designed with advanced link technologies. Tailored to your demanding workloads, PanFabric connects system devices with cutting-edge solutions such as optimized network topologies and traffic engineering, delivering significant performance boost for large-scale AI/HPC execution.

Key Benefits

Ultra-High Scalability

Scale your fabric to meet infrastructure demand. By leveraging 256-lane switches, PanFabric offers high-speed connection for tens of thousands of system devices.

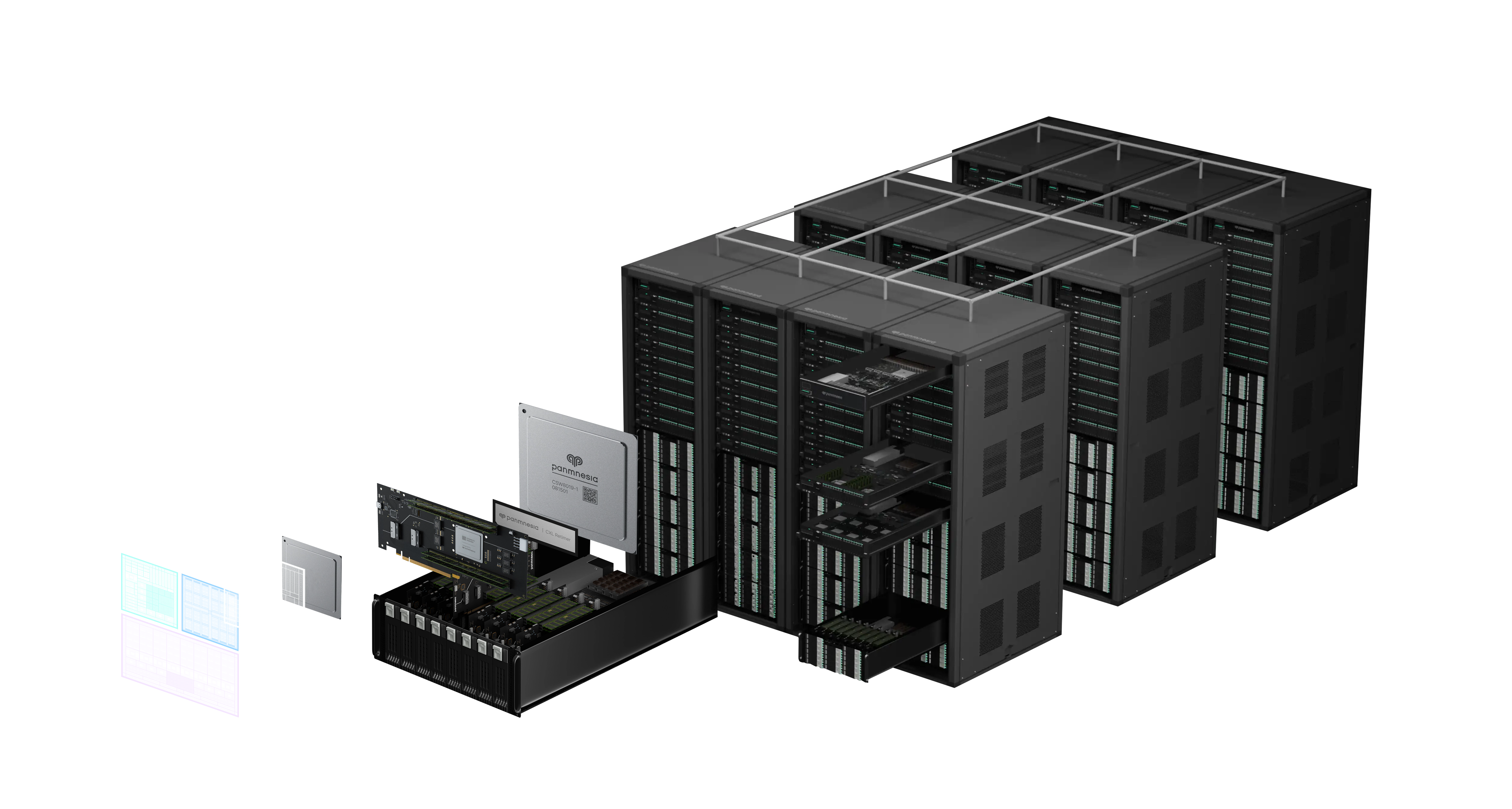

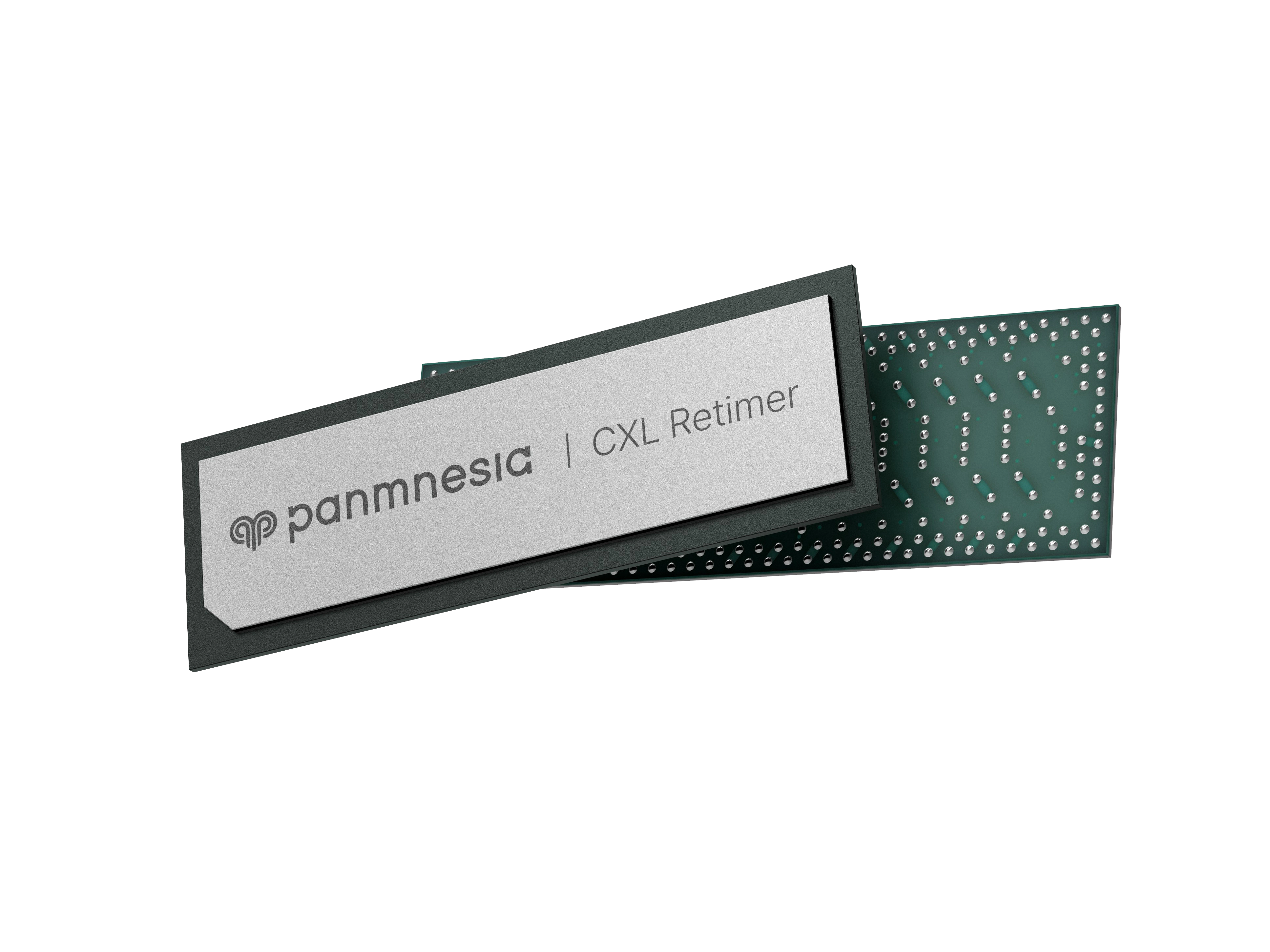

Disaggregating Compute and Memory

Enable true composability in AI infrastructure. PanFabric’s high-speed cache-coherent link technology allows seamless expansion, pooling, and sharing of compute and memory resources.

Holistic Optimizations at Fabric-Level

Maximize performance with Panmnesia’s proprietary fabric-level optimization solution. PanFabric ensures low tail latencies and high throughput for the network-intensive distributed AI/HPC applications.

Advanced Fabric Manager

PanFabric includes an advanced fabric manager that intelligently orchestrates the disaggregated fabric. In response to application demand, it allocates the optimal compute and memory resources from available candidates across the fabric and seamlessly connects them via high-speed links. The fabric manager also ensures reliable, large-scale operation by monitoring device status in real time.

Device identification

Fabric discovery

Resource allocation

QoS telemetry support

Connectivity management

Security management

Device health management

Real-world Use Cases

AI Training Infrastructure

Accelerate machine learning workloads with high-bandwidth memory expansion and GPU interconnects.

Ideal for large-scale AI training environments requiring massive memory capacity and ultra-low latency communication between accelerators.

Data Center Expansion

Scale your data center infrastructure with flexible CXL device connectivity and memory disaggregation.

Enables dynamic resource allocation and improves overall system efficiency in enterprise environments with growing computational demands.

HPC Cluster Computing

Connect thousands of compute nodes with ultra-low latency for high-performance computing workloads.

Ideal for scientific computing, simulation, and research applications requiring massive parallel processing and fast inter-node communication.