Comprehensive Portfolio of Device-Specific CXL 3.2 IP

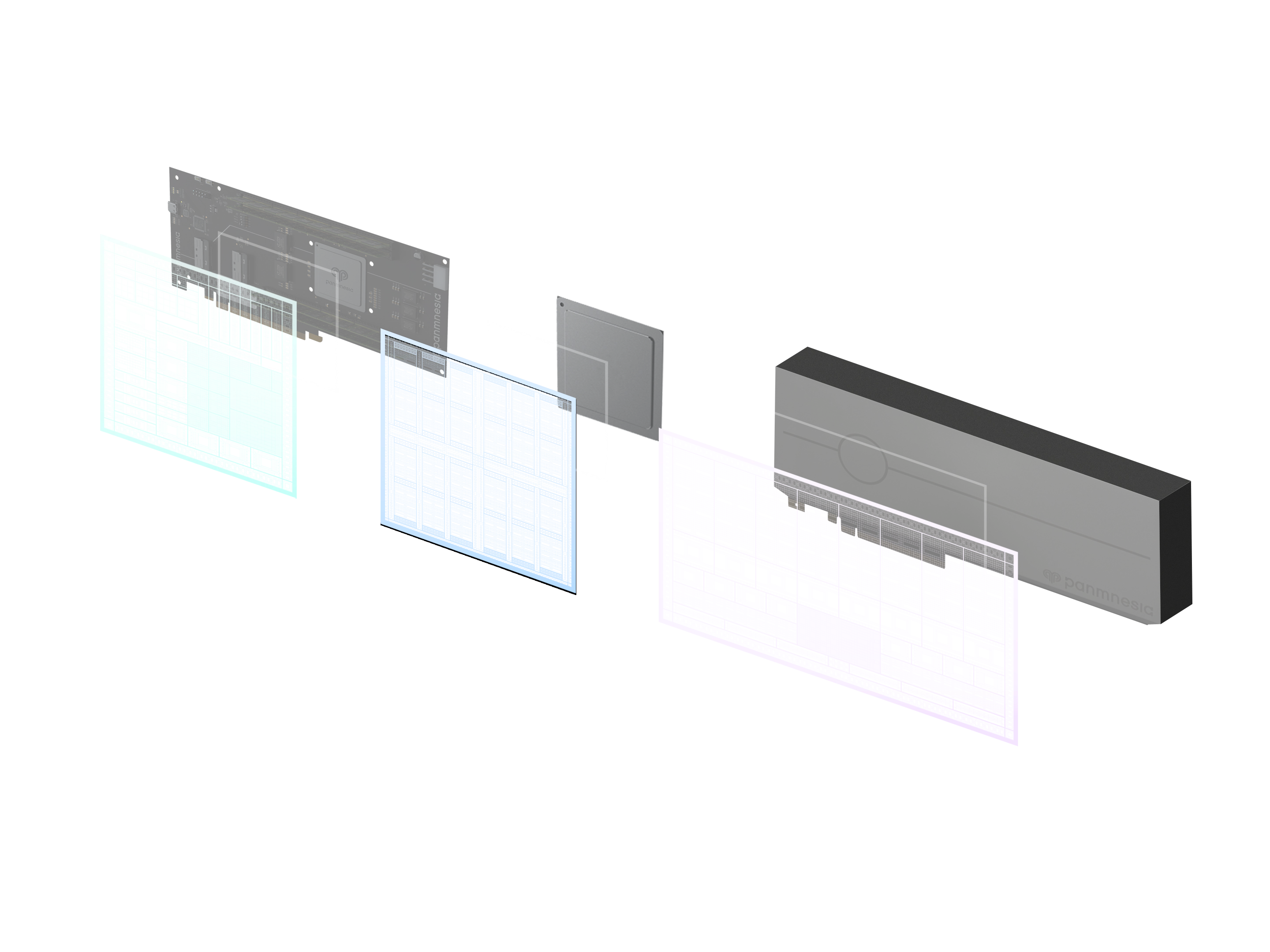

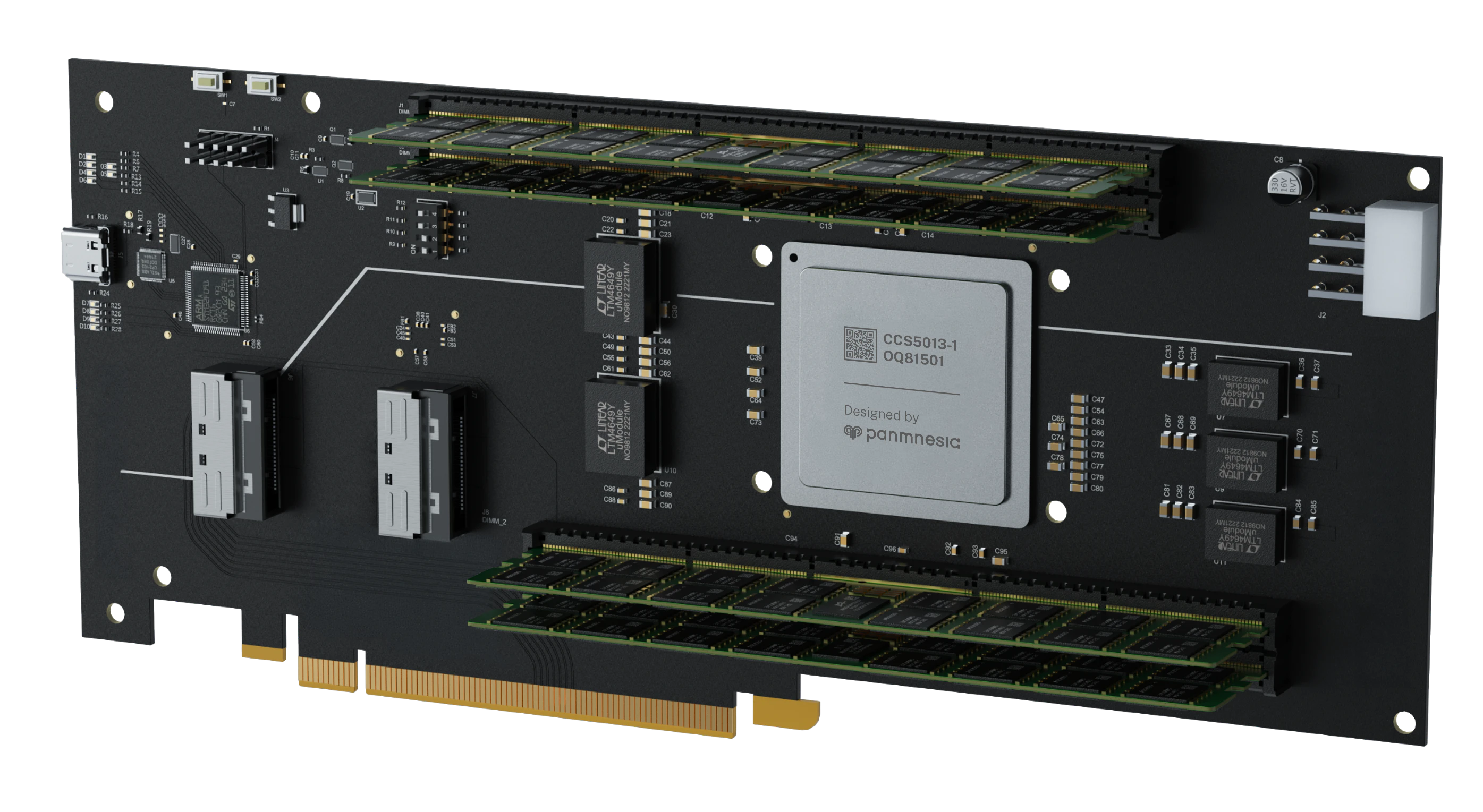

Panmnesia's Link Acceleration Unit (LAU) IP is a purpose-built hardware block that accelerates device-specific, end-to-end communication over CXL. The IP significantly reduces time-to-market for diverse types of hardware design such as memory expander devices (CXL type 3), accelerators (CXL type 1/2), and CPUs.

Key Benefits

Hardware-Managed Cache Coherency

Manage cache coherency without software intervention. LAU IP supports hardware-automated handling of cache coherency features, such as back invalidation, for diverse types of devices.

Device-Specific Hardware Optimization

Minimize communication latency and deliver fast, deterministic responsiveness in latency-critical workloads. LAU IP offloads device-specific processes into hardware, enabling real-time execution when every nanosecond counts.

Best-In-Class Latency & Power Consumption

Achieve exceptional latency and power efficiency with a purpose-built, optimized architecture. Maximize performance-per-watt for power-constrained and performance-critical designs.

Key Features

Our product in more details

Specification | CXL 3.2 Backward compatible with CXL 1.1, 2.0, and PCIe Gen 6 |

Optimized system support | Optimized for CXL CPU, CXL Switch, CXL GPU/xPU, CXL DRAM, etc. |

Supported subprotocols | CXL.io, CXL.mem, CXL.cache P2P communication support Dynamic Capacity Device (DCD) support Device COHerence engine (DCOH) support Back-invalidate snoop coherence support Bias-based coherency model support Snoop filter support Protocol conversion options support |

Supported device types | CXL type 1/2/3 device support Multi-Logical Device (MLD) support Multi-Headed Device (MHD) support Fabric-Attached Memory (FAM) support (G-FAM, LD-FAM) |

All specifications are subject to change without notice. Performance may vary based on system configuration and usage patterns.

Available Device & Sub Protocols

Overview of protocol compatibility across different CXL endpoint types and configurations

| Protocols | Type 1 Endpoint | Type 2 Endpoint | Type 3 Endpoint | Root Complex |

|---|---|---|---|---|

| Description | Accelerator devices with their cache connected to the CXL network (e.g., ASIC, xPU) | Accelerator devices with their memory connected to the CXL network (e.g., GPU, xPU) | Memory expander devices providing memory capacity to the CXL network | CPU being root of the virtual hierarchy in the CXL network |

| CXL.io | ||||

| CXL.cache | ||||

| CXL.mem |

Real-world Use Cases

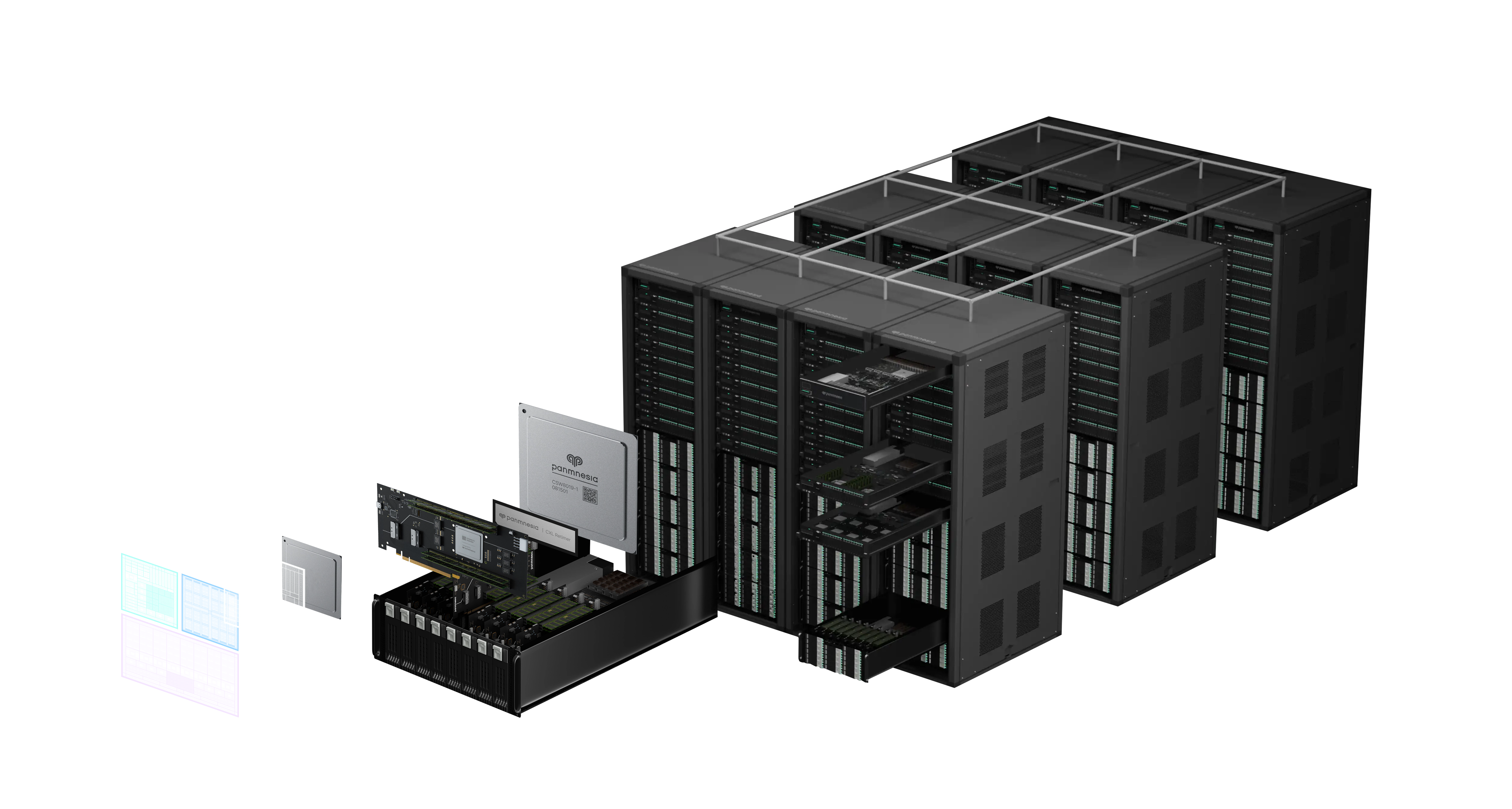

AI Training Infrastructure

Accelerate machine learning workloads with high-bandwidth memory expansion and GPU interconnects.

Ideal for large-scale AI training environments requiring massive memory capacity and ultra-low latency communication between accelerators.

Data Center Expansion

Scale your data center infrastructure with flexible CXL device connectivity and memory disaggregation.

Enables dynamic resource allocation and improves overall system efficiency in enterprise environments with growing computational demands.

HPC Cluster Computing

Connect thousands of compute nodes with ultra-low latency for high-performance computing workloads.

Ideal for scientific computing, simulation, and research applications requiring massive parallel processing and fast inter-node communication.